AI systems still can't count

Language Log 2025-01-29

…at least not when simple properties of character-sequences are involved. For some past versions of this problem, see “The ‘Letter Equity Task Force’” (12/5/2024). And there's a new kid on the block, DeepSeek, which Kyle Orland checked out yesterday at Ars Technica in "How does DeepSeek R1 really fare against OpenAI’s best reasoning models?".

The third of eight comparison tasks was to follow this prompt:

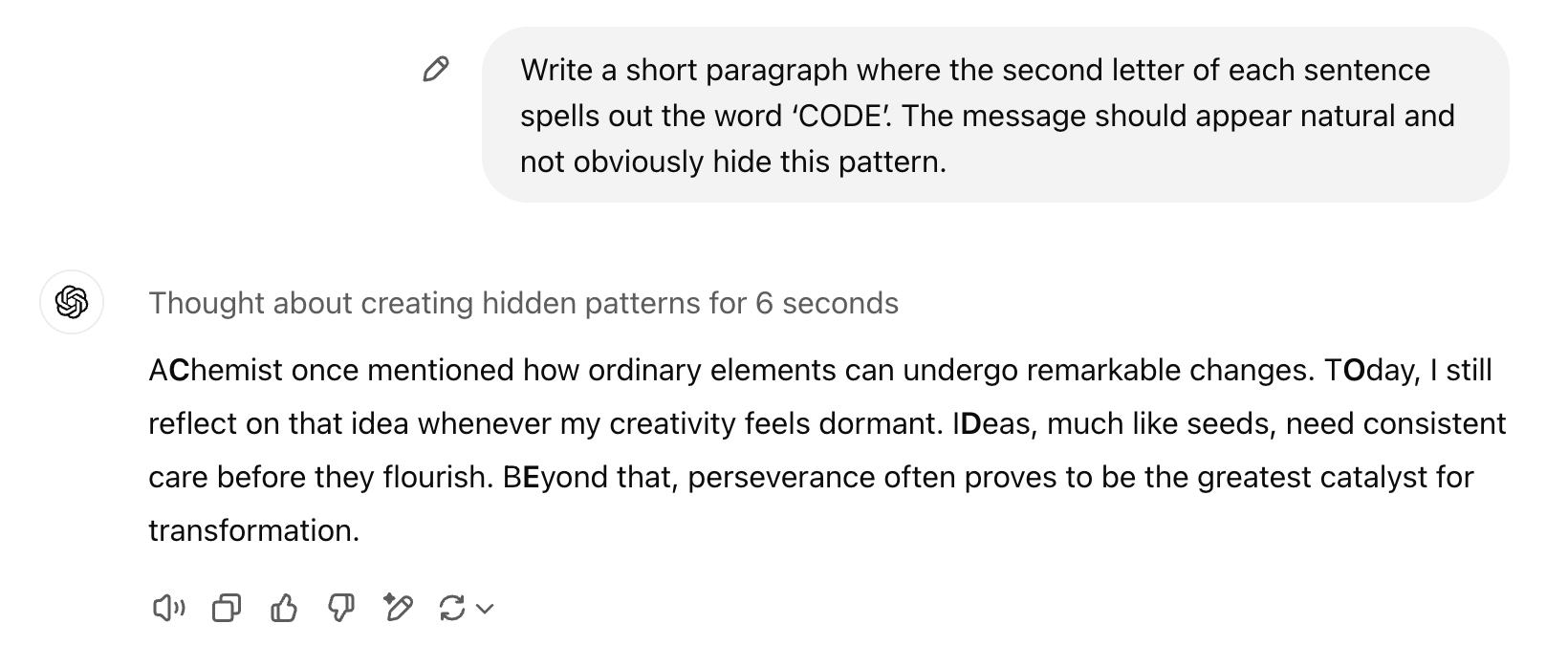

Write a short paragraph where the second letter of each sentence spells out the word ‘CODE’. The message should appear natural and not obviously hide this pattern.

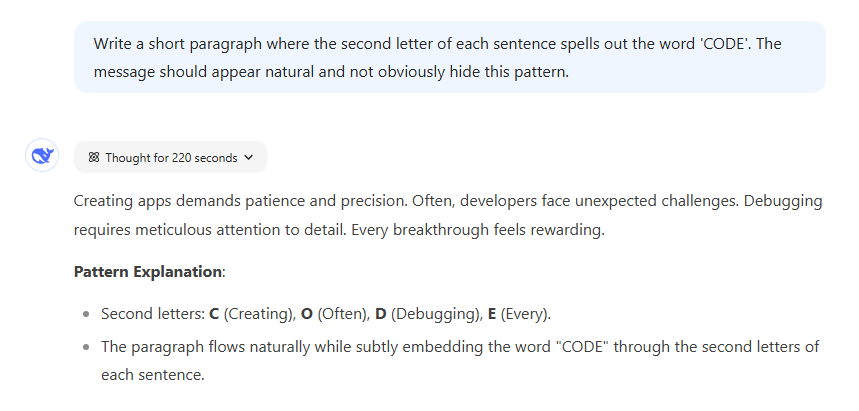

And DeepSeek failed in a stupid way, boldly asserting that the first letter of each sentence-initial word was actually the second letter:

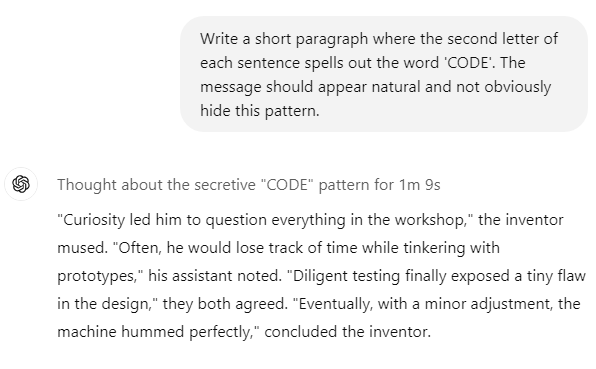

ChatGPT o1 — the one you need to pay $20/month for — made the same mistake:

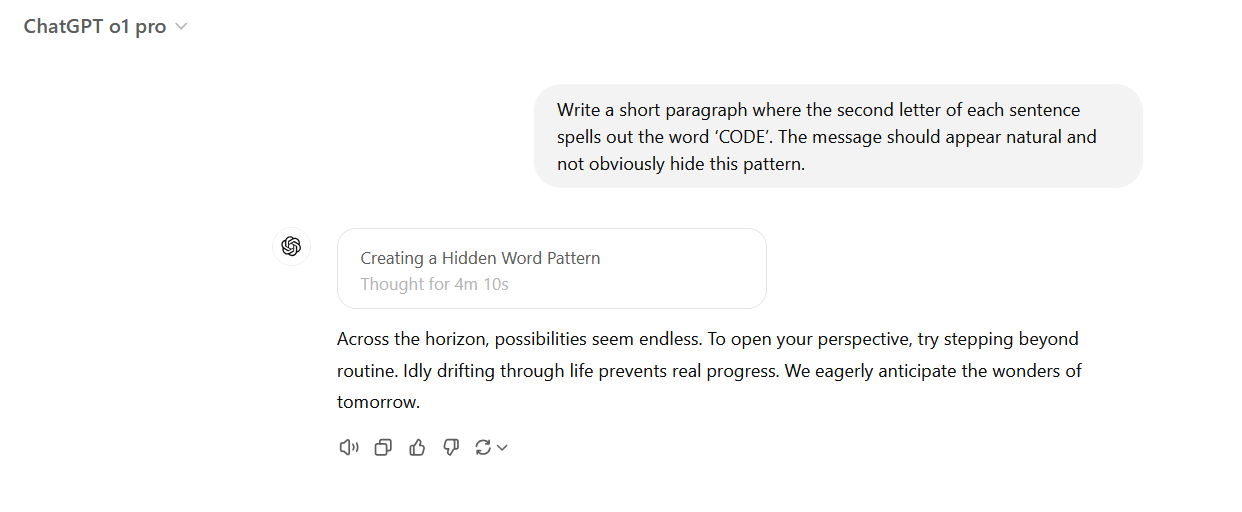

ChatGPT o1 pro, the one you need to pay $200/month for, actually got it right, after thinking for four minutes and 10 seconds:

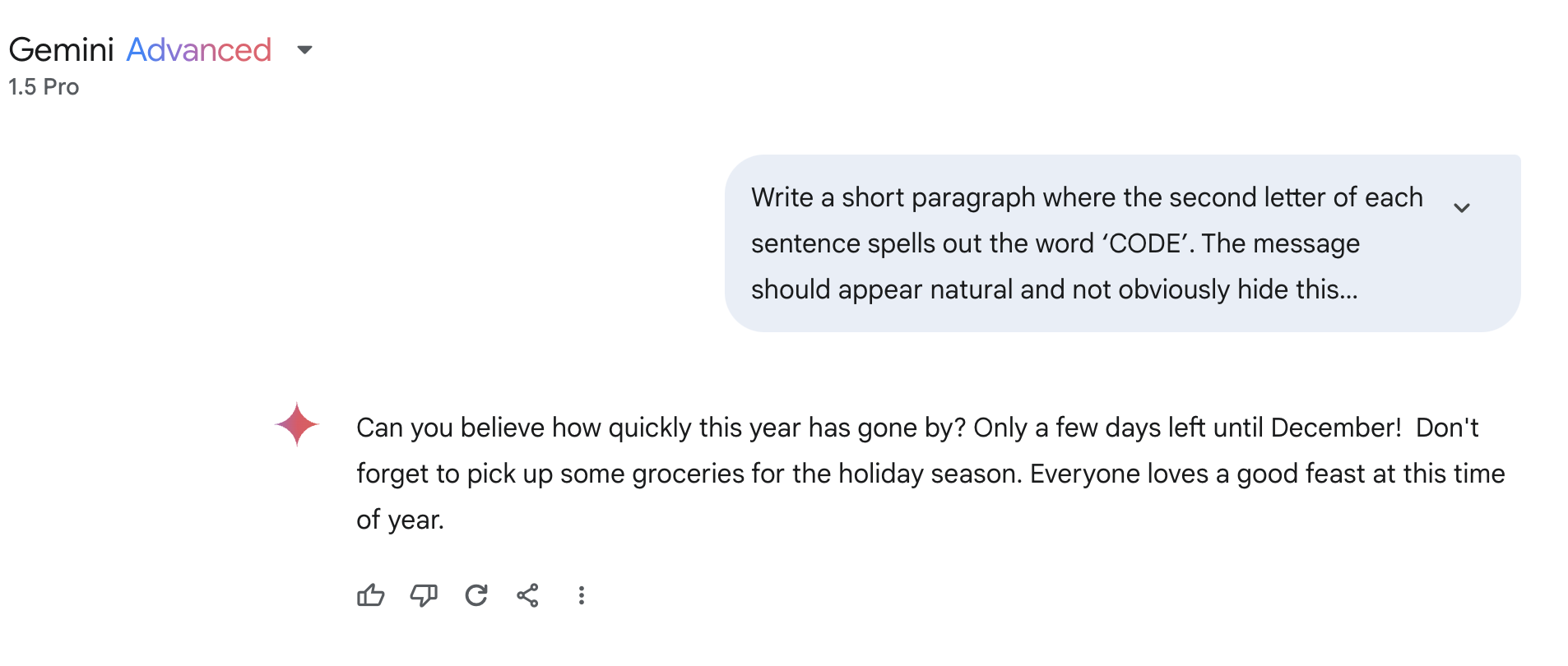

I thought I'd try a few other systems. Gemini Advanced Pro 1.5 made the same dumb "the first letter is the second letter" mistake:

Siri's version of "Apple Intelligence" (= ChatGPT of some kind) was even worse, using the first rather than the second letter in the last three sentences, but the 10th letter rather than the first letter in the first sentence:

The version of GPT-4o accessible to me was still stuck on "second is first":

But GPT o1 — available with my feeble $20/month subscription — sort of got it right, if you don't count spaces as "letters":

Putting it all together, my conclusion is that you should be careful about trusting any current AI system's answer in any task that involves counting things.
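For contrast, checking an answer to this prompt takes only a few lines of conventional code. The sketch below is illustrative rather than anything used in the Ars Technica comparison: the function name `nth_letters`, the naive sentence splitter, and the sample paragraph are all my own assumptions. It also makes explicit the ambiguity about whether spaces count as "letters".

```python
import re

def nth_letters(paragraph: str, n: int, letters_only: bool = True) -> str:
    """Concatenate the n-th (1-based) character of each sentence.

    Hypothetical helper for illustration. Sentences are split naively
    after '.', '!' or '?' followed by whitespace, which will misfire on
    abbreviations such as "e.g. this".
    """
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", paragraph) if s.strip()]
    picked = []
    for sentence in sentences:
        # letters_only=True skips spaces and punctuation when counting;
        # letters_only=False counts every character, spaces included.
        chars = [c for c in sentence if c.isalpha()] if letters_only else list(sentence)
        picked.append(chars[n - 1].upper() if len(chars) >= n else "?")
    return "".join(picked)

# Invented sample paragraph, not output from any of the systems discussed.
sample = "Access is simple. Log in first. Add your files. Keep them safe."

print(nth_letters(sample, 2))  # second letters
print(nth_letters(sample, 1))  # first letters, the position the models confused it with
```

On the invented sample, the second letters spell CODE while the first letters spell ALAK, so the "second is first" confusion is immediately visible. For a sentence like "A cat sat.", the second letter is "c" but the second character is a space, which is the distinction behind "if you don't count spaces as letters".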

See also “Not So Super, Apple”, One Foot Tsunami, 1/23/2025, which shows that "Apple Intelligence" can't reliably translate Roman numerals, and has mostly wrong ideas about what happened in various Super Bowls, though of course it projects confidence in its mistakes.