Cumulative syllable-scale power spectra

Language Log 2019-06-11

Babies start making speech-like vocalizations long before they start to produce recognizable words — various stages of these sounds are variously described as cries, grunts, coos, goos, yells, growls, squeals, and "reduplicated" or "variegated" babbling. Developmental progress is marked by variable mixtures of variable versions of these noises, and their analysis may provide early evidence of later problems. But acoustic-phonetic analysis of infant vocalizations is hindered by the fact that many sounds (and sound-sequences) straddle category boundaries. And even for clear instances of "canonical babbling", annotators often disagree on syllable counts, making rate estimation difficult.

In "Towards automated babble metrics" (5/26/2019), I toyed with the idea that an antique work on instrumental phonetics — Potter, Koop and Green's 1947 book Visible Speech — might have suggested a partial solution:

By recording speech in such a way that its energy envelope only is reproduced, it is possible to learn something about the effects of recurrences such as occur in the recital of rimes or poetry. In one form of portrayal, the rectified speech envelope wave is speeded up one hundred times and translated to sound pattern form as if it were an audible note.
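The arithmetic behind that analog trick is simple. Playing a recording back 100 times faster multiplies every frequency in it by 100, so slow recurrences in the amplitude envelope turn into audible pitches. Taking, purely for illustration, an envelope that rises and falls five times per second:

$$
f_{\text{heard}} = 100 \times f_{\text{envelope}} = 100 \times 5\ \text{Hz} = 500\ \text{Hz}
$$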

Since then, a bit of looking around in the literature turned up some interesting recent explorations of a similar idea: Sam Tilsen and Keith Johnson, "Low-frequency Fourier analysis of speech rhythm", JASA 2008; Sam Tilsen and Amalia Arvaniti, "Speech rhythm analysis with decomposition of the amplitude envelope: characterizing rhythmic patterns within and across languages", JASA 2013.

What I've done is a little different from the (analog) method discussed in Visible Speech, and also somewhat different from the various (digital) methods discussed in the Tilsen et al. works, though I suspect that the differences are not really critical, at least for the applications that I have in mind. For those who care about the details, I calculated the RMS amplitude of the speech signal, using a 25-millisecond window advanced 5 milliseconds at a time (so sampled at 200 Hz); I then smoothed the result by convolving with the derivative of a Gaussian with a standard deviation of 70 milliseconds; and finally I calculated the power spectrum in a 2-second (or 3-second) Hamming window, advanced 1 second at a time, and averaged those spectra over the whole of a selected recording. (Source code available on request.)
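For readers who want to experiment, here is a minimal NumPy sketch of the steps just listed. It is not the code behind the figures in this post (that code is available only on request). The function names, the truncation of the kernel at ±4 standard deviations, and the decision to discard the filter's edge samples are assumptions made for this sketch. One property worth knowing: a derivative-of-Gaussian kernel acts as a band-pass filter whose gain is proportional to f·exp(−2π²σ²f²). It removes the envelope's constant (DC) level entirely, and for σ = 70 ms its gain peaks near 1/(2πσ) ≈ 2.3 Hz.

```python
import numpy as np


def rms_envelope(x, fs, win_s=0.025, hop_s=0.005):
    """RMS amplitude in win_s windows advanced hop_s at a time.

    Returns the envelope and its sample rate (200 Hz for the defaults).
    """
    x = np.asarray(x, dtype=float)  # avoid integer overflow when squaring
    win = int(round(win_s * fs))
    hop = int(round(hop_s * fs))
    starts = range(0, len(x) - win + 1, hop)
    env = np.array([np.sqrt(np.mean(x[s:s + win] ** 2)) for s in starts])
    return env, fs / hop


def dgauss_kernel(sigma_s, env_fs, half_width=4.0):
    """Derivative of a Gaussian with std sigma_s seconds, sampled at env_fs Hz."""
    sigma = sigma_s * env_fs                 # std in envelope samples
    n = int(np.ceil(half_width * sigma))     # assumption: truncate at +/- 4 std
    t = np.arange(-n, n + 1, dtype=float)
    g = np.exp(-0.5 * (t / sigma) ** 2)
    return -t / sigma ** 2 * g               # d/dt of the (unnormalized) Gaussian


def average_power_spectrum(y, env_fs, win_s=3.0, hop_s=1.0):
    """Mean of Hamming-windowed power spectra over win_s windows, hop_s apart."""
    win = int(round(win_s * env_fs))
    hop = int(round(hop_s * env_fs))
    w = np.hamming(win)
    spectra = [np.abs(np.fft.rfft(y[s:s + win] * w)) ** 2
               for s in range(0, len(y) - win + 1, hop)]
    if not spectra:
        raise ValueError("signal is shorter than one analysis window")
    freqs = np.fft.rfftfreq(win, d=1.0 / env_fs)
    return freqs, np.mean(spectra, axis=0)


def syllable_scale_spectrum(x, fs, win_s=3.0, sigma_s=0.070):
    """RMS envelope -> derivative-of-Gaussian filter -> averaged power spectrum."""
    env, env_fs = rms_envelope(x, fs)
    k = dgauss_kernel(sigma_s, env_fs)
    # Assumption: "valid" drops the edges, where zero padding around a
    # positive envelope would otherwise add spurious ramps.
    filtered = np.convolve(env, k, mode="valid")
    return average_power_spectrum(filtered, env_fs, win_s=win_s)


if __name__ == "__main__":
    # Synthetic test: noise whose amplitude rises and falls 4 times per second.
    fs = 16000
    t = np.arange(30 * fs) / fs
    rng = np.random.default_rng(0)
    x = rng.standard_normal(t.size) * 0.5 * (1 + np.sin(2 * np.pi * 4.0 * t))
    freqs, power = syllable_scale_spectrum(x, fs)
    print("peak at", freqs[np.argmax(power)], "Hz")
```

On that synthetic input the averaged spectrum should peak in the 4 Hz bin; with a three-second window at the 200 Hz envelope rate, the bins are 1/3 Hz apart. Passing `win_s=2.0` gives the two-second variant, with coarser 0.5 Hz bins.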

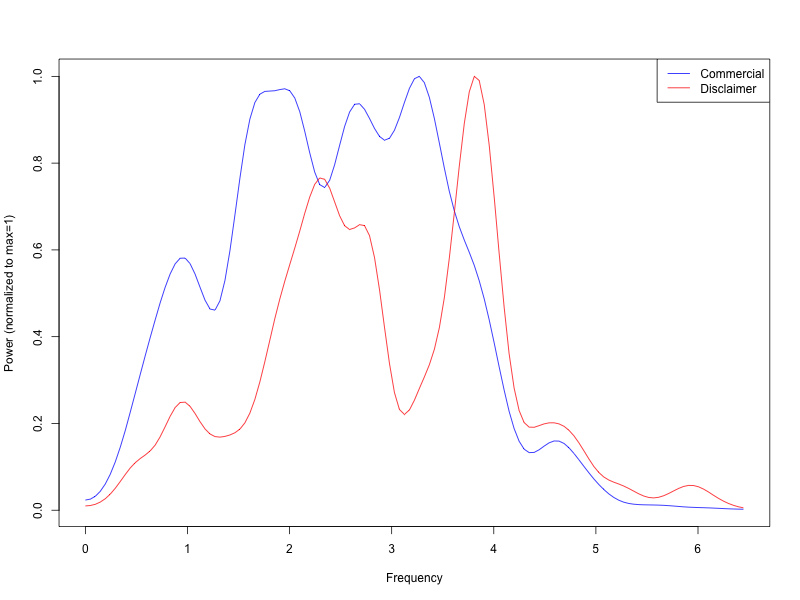

Here's the result, using a three-second analysis window, for the first 15 seconds of a car commercial:

and for this typically-rapid disclaimer:

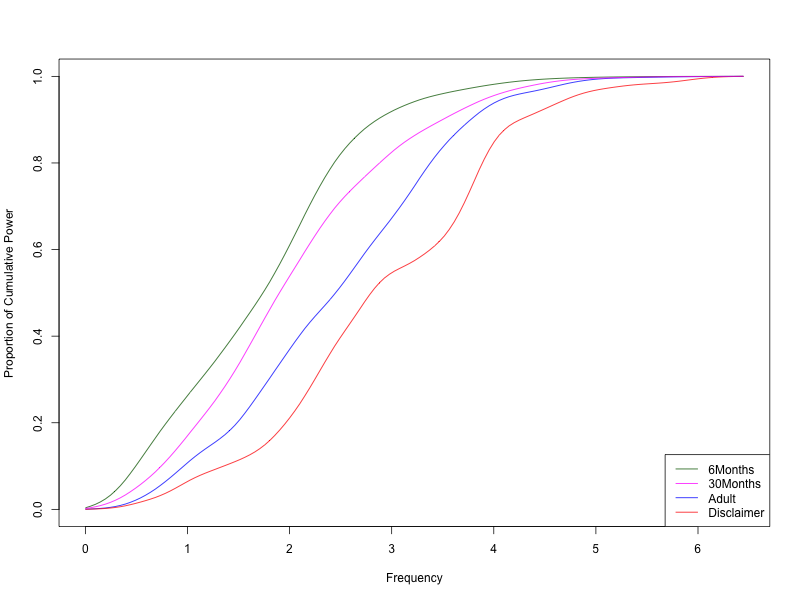

That looks sensible — but how can we reduce those sensible-looking wiggles to something more statistically tractable? One obvious idea is to look at the cumulative version. And let's add two other sources — this babble sample:

and this recording of a (highly verbal) 2-year-old:

The cumulative spectral power for all four:

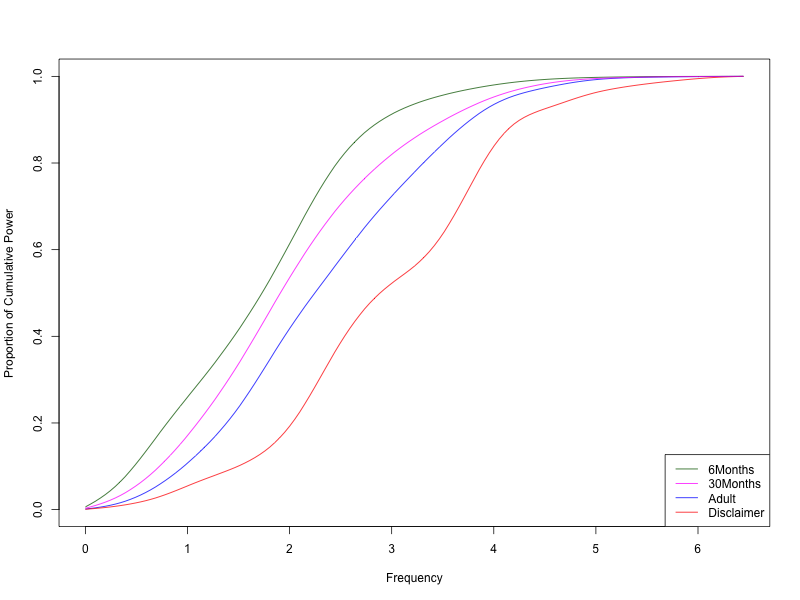
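A cumulative curve like these can be computed from any averaged spectrum with a running sum. The sketch below makes assumptions the post does not spell out: each curve is normalized to end at 1, so that recordings of different loudness can be compared; an optional upper frequency limit `fmax` restricts the band; and `quantile_frequency`, which reads off the frequency where the curve first reaches a given fraction (the 50% point, for example), is a hypothetical single-number summary added here for illustration, not something computed in this post. All names are hypothetical.

```python
import numpy as np


def cumulative_spectrum(freqs, power, fmax=None):
    """Running sum of spectral power, normalized so the last value is 1.

    fmax optionally limits the sum to frequencies <= fmax (in Hz).
    """
    freqs = np.asarray(freqs, dtype=float)
    power = np.asarray(power, dtype=float)
    if fmax is not None:
        keep = freqs <= fmax
        freqs, power = freqs[keep], power[keep]
    cum = np.cumsum(power)
    if cum[-1] <= 0:
        raise ValueError("no spectral power in the selected band")
    return freqs, cum / cum[-1]


def quantile_frequency(freqs, cum, q=0.5):
    """Lowest frequency at which the normalized cumulative power reaches q."""
    # cum is non-decreasing because power is non-negative, so a binary
    # search finds the first index with cum[idx] >= q.
    idx = int(np.searchsorted(cum, q, side="left"))
    return freqs[min(idx, len(freqs) - 1)]


if __name__ == "__main__":
    freqs = np.arange(0.0, 10.5, 0.5)                   # 0, 0.5, ..., 10 Hz
    power = np.exp(-0.5 * ((freqs - 4.0) / 1.0) ** 2)   # a bump centered on 4 Hz
    f, cum = cumulative_spectrum(freqs, power, fmax=8.0)
    print(quantile_frequency(f, cum, 0.5))              # prints 4.0
```

A faster speaker should shift energy toward higher modulation frequencies, moving the whole cumulative curve, and any quantile read from it, to the right.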

Here's the same thing using a two-second (rather than three-second) analysis window:

Four swallows don't make a summer. But this strikes me as (the start of) a promising way to quantify syllable-scale speaking rate in recordings where the notion of "syllable" may not be well defined.