Is language "analog"?

Open Access Now 2016-07-22

David Golumbia's 2009 book The Cultural Logic of Computation argues that "the current vogue for computation" covertly revives an "old belief system — that something like rational calculation might account for every part of the material world, and especially the social and mental worlds". Golumbia believes that this is a bad thing.

I have nothing to say here about the philosophical or cultural impact of computer technology. Rather, I want to address a claim (or perhaps I should call it an assumption) that Golumbia makes about speech and language, which I think is profoundly mistaken.

Golumbia attaches a lot of significance to the word analog, which he uses (without defining it) in the sense "continuously variable", putting it thereby in opposition to the digital representations that modern computers generally require.

His first use of analog occurs in the following context:

> Each citizen can work out for himself the State philosophy: "Always obey. The more you obey, the more you will be master, for you will only be obeying pure reason. In other words yourself …"
>
> Ever since philosophy assigned itself the role of ground it has been giving the established powers its blessing, and tracing its doctrine of faculties onto the organs of State power. To submit a phenomenon to computation is to striate otherwise-smooth details, analog details, to push them upwards toward the sovereign, to make only high-level control available to the user, and then only those aspects of control that are deemed appropriate by the sovereign (Deleuze 1992).

His other 28 uses of analog present a consistent picture: the world is analog; representing it in digital form is a critical category change that leads to philosophical error and serves the interests of hierarchical social control.

From his introductory chapter:

> Human nature is highly malleable; the ability to affect what humans are and how they interact with their environment is one of my main concerns here, specifically along the lines that computerization of the world encourages computerization of human beings. There are nevertheless a set of capacities and concerns that characterize what we mean by human being: human beings typically have the capacity to think; they have the capacity to use one or more (human) languages; they define themselves in social relationship to each other; and they engage in political behavior. These concerns correspond roughly to the chapters that follow. In each case, a rough approximation of my thesis might be that most of the phenomena in each sphere, even if in part characterizable in computational terms, are nevertheless analog in nature. They are gradable and fuzzy; they are rarely if ever exact, even if they can achieve exactness. The brain is analog; languages are analog; society and politics are analog. Such reasoning applies not merely to what we call the "human species" but to much of what we take to be life itself, […]

But crucial aspects of human speech and language are NOT "analog" — are not continuously variable physical (or for that matter spiritual) quantities. This fact has nothing to do with computers — it was as true 100 or 1,000 or 100,000 years ago as it is today, and it's been recognized by every human being who ever looked seriously at the question.

There are three obvious non-analog aspects of speech and language. In linguistic jargon, these are the entities involved in syntax, morphology, and phonology. In more ordinary language, they're phrases, words, and speech sounds.

Let's start in the middle, with words.

In English, we have the words red and blue; in French, rouge and bleu. In neither language is "purple" some kind of sum or average or other interpolation between the two. The pronunciation of a word is infinitely variable; but a word's identity is crisply defined. It's (the psychological equivalent of) a symbol, not a signal — and thereby it's not "gradable and fuzzy", it's not part of some "smooth" continuum of entities, it's an entity that's qualitatively distinct from other entities of the same type.
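The symbol/signal contrast can be made concrete with a toy sketch. Everything in it is an assumption for illustration, not a model of human speech perception: each spoken token is reduced to a hypothetical two-number feature vector, and "recognition" is plain nearest-prototype classification with a distance cutoff. The tokens vary continuously, yet the output is always one of a finite set of word identities, or no word at all. Averaging the red and blue prototypes is a perfectly good operation on signals, but it does not yield a word meaning "purple".

```python
import math
from typing import Optional

# Hypothetical prototypes: each word's pronunciation summarized as a 2-D
# feature vector. Real speech has far more dimensions; this is a toy.
PROTOTYPES = {
    "red": (1.0, 0.0),
    "blue": (0.0, 1.0),
}

def recognize(token: tuple[float, float], max_dist: float = 0.3) -> Optional[str]:
    """Map a continuously variable token to a discrete word identity.

    Returns the nearest prototype's word if it lies within max_dist,
    otherwise None (the token is not heard as any known word).
    """
    word, dist = min(
        ((w, math.dist(token, p)) for w, p in PROTOTYPES.items()),
        key=lambda pair: pair[1],
    )
    return word if dist <= max_dist else None

def blend(a: tuple[float, float], b: tuple[float, float]) -> tuple[float, ...]:
    """Average two signals point by point: an operation on signals, not symbols."""
    return tuple((x + y) / 2 for x, y in zip(a, b))

print(recognize((0.9, 0.1)))    # red
print(recognize((0.05, 1.1)))   # blue
print(recognize(blend(PROTOTYPES["red"], PROTOTYPES["blue"])))  # None
```

The cutoff `max_dist` is an arbitrary choice; the only design point is that the function's output is a discrete label, however finely the input varies.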

This is not just some invention of scribes and linguists. Pre-literate children have no trouble with word constancy — they easily recognize when a familiar word recurs. And speakers of a language without a generally used written form have the same ability.

Nor is this property of words functionally irrelevant — the possibility of communication relies crucially on the fact that each of us knows thousands of words that are distinct, individually recognizable, and generally arbitrary in their relationship to their meanings. No coherent account of the human use of speech and language is possible without recognizing this fact. (We'll also need a story about how words can be made up of the pieces that linguists call "morphemes", and a story about how word-sequences sometimes come to have non-compositional meanings. But none of that will erase the essentially discrete and symbolic nature of the system's elements.)

What about phonology? Again, we know that a spoken "word" is not just an arbitrary class of vocal noises. Each (variety of a) language has a phonological system, whereby a simple combination of a small finite set of basic elements defines the claims that each word makes on articulations and sounds. This phonological principle is what makes alphabetic writing possible.

The inventory of basic elements and the patterns of combination vary from language to language. And the pronunciation of a word or phrase is infinitely variable, and this phonetic variation carries additional information about speakers, attitudes, contexts, and so on. But phonology itself is again a crucially symbolic system — its representations are not "gradable and fuzzy". And if this serves to "striate otherwise-smooth details, analog details" and thereby "to push them upwards toward the sovereign", it's something that happened whenever human spoken language was invented, probably hundreds of thousands of years ago, and certainly long before anyone dreamt of digital computers.

Like the nature of words as abstract symbols, the symbolic nature of phonology is not functionally irrelevant. In a plausible sense of the word "word", an average 18-year-old knows tens of thousands of them. Most of these are learned from experiencing their use in context, often just a handful of times. Experiments by George Miller and others, many years ago, showed that young children can often learn a (made-up) word from one incidental use.

Given the infinitely variable nature of spoken performance, the whole system would be functionally impossible if "learning a word" required us to learn to delimit an arbitrary region of the space of possible vocal noises. The fact that words are spelled out phonologically means that every instance of every spoken word helps us to learn the phonological system, which in turn makes it easy for us to learn — and share with our community — the pronunciation of a very large set of distinctly different word-level symbols.
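A back-of-the-envelope sketch of how far a small inventory goes. The numbers are hypothetical round figures, not a description of English or French: 20 consonants, 5 vowels, and only CV and CVC syllables allowed. Under those assumptions, learning a new word means storing a short string over already-known units, rather than delimiting a region of acoustic space.

```python
from itertools import product

# Hypothetical inventory: 20 consonants and 5 vowels (illustrative round numbers).
CONSONANTS = [f"C{i}" for i in range(20)]
VOWELS = [f"V{i}" for i in range(5)]

def syllables() -> list[tuple[str, ...]]:
    """All CV and CVC syllables over the toy inventory."""
    cv = list(product(CONSONANTS, VOWELS))
    cvc = list(product(CONSONANTS, VOWELS, CONSONANTS))
    return cv + cvc

def count_words(max_syllables: int) -> int:
    """Number of distinct syllable strings of length 1..max_syllables."""
    n = len(syllables())
    return sum(n ** k for k in range(1, max_syllables + 1))

print(len(syllables()))   # 2100
print(count_words(3))     # 9265412100
```

Twenty-five basic units, under these toy constraints, already allow billions of distinct forms of up to three syllables, far more than any speaker needs.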

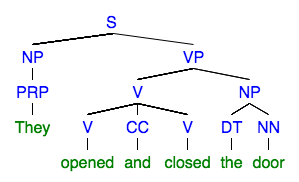

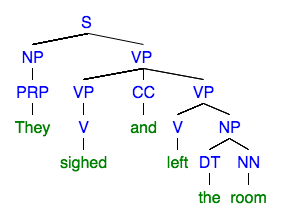

Finally, what about phrase structure? Another crucial and universal fact about human language is that complex messages can be created by combining simpler ones. And this combination is not just one thing after another. Different structural combinations have different meanings — in hierarchical patterns that have nothing to do with relations of social dominance. The difference between conjoined verbs both governing the same direct object ("opened and closed the door") and an intransitive verb conjoined with a verb-object combination ("sighed and left the room") is an example — and like other such distinctions, it's a discrete one, not "fuzzy and gradable":

There are lots of different ideas about exactly what the relevant distinctions are, much less how to write them down; and under any account, particular word-sequences are often ambiguous. But this is because there are multiple possible structures, and not because these structures are arbitrary "striations" of a continuous space.
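Structural ambiguity can be pictured as a choice among a finite set of discrete objects. The sketch below is an illustration, not any particular syntactic theory: it writes constituents as nested Python tuples and enumerates every binary bracketing of a word sequence, with no grammar to filter out implausible ones. The example phrase "old men and women", and the choice to group a conjunction with its second conjunct, are assumptions made for the illustration.

```python
def bracketings(words):
    """Enumerate every binary bracketing of a word sequence.

    No grammar is applied, so many results are not linguistically
    plausible; the point is only that the candidates form a finite
    set of discrete structures.
    """
    words = tuple(words)
    if len(words) == 1:
        return [words[0]]
    results = []
    for i in range(1, len(words)):  # split point between left and right parts
        for left in bracketings(words[:i]):
            for right in bracketings(words[i:]):
                results.append((left, right))
    return results

trees = bracketings(["old", "men", "and", "women"])
narrow = (("old", "men"), ("and", "women"))   # [old men] and women
wide = ("old", ("men", ("and", "women")))     # old [men and women]

print(len(trees))                      # 5
print(narrow in trees, wide in trees)  # True True
print(narrow == wide)                  # False
print(len(bracketings("a b c d e".split())))  # 14
```

The number of binary bracketings grows as the Catalan numbers (2, 5, 14 for 3, 4, 5 words), and a real grammar would rule most of them out; either way, each candidate is a distinct structure, and nothing lies "between" two of them.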

That's enough, and too much, for today. But let me note in passing that the idea of "[making] only high-level control available to the user" has been developed at length in a very different context, with little to do with language and nothing at all to do with computation. I'm referring to the Degrees of Freedom Problem, also known as the Motor Equivalence Problem, first suggested by Karl Lashley ("Integrative Functions of the Cerebral Cortex", 1933), and developed further by Nicolai Bernstein (The Coordination and Regulation of Movement, 1967):

> It is clear that the basic difficulties for co-ordination consist precisely in the extreme abundance of degrees of freedom, with which the [nervous] centre is not at first in a position to deal.

Turvey ("Coordination", American Psychologist 1990) observes that

> As characteristic expressions of biological systems, coordinations necessarily involve bringing into proper relation multiple and different component parts (e.g., 10¹⁴ cellular units in 10³ varieties), defined over multiple scales of space and time. The challenge of properly relating many different components is readily illustrated. Muscles act to generate and degenerate kinetic energy in body segments. There are about 792 muscles in the human body that combine to bring about energetic changes at the skeletal joints. Suppose we conceptualize the human body as an aggregate of just hinge joints like the elbow. It would then comprise about 100 mechanical degrees of freedom, each characterizable by two states, position and velocity, to yield a state space of, minimally, 200 dimensions.

and expresses Bernstein's idea succinctly as

> Each and every movement comprises a state space of many dimensions; the problem of coordination, therefore, is that of compressing such high-dimensional state spaces into state spaces of very few dimensions.
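Turvey's arithmetic, plus one deliberately simple way to picture "compressing" a high-dimensional state space: a hypothetical fixed linear map (a "synergy") that drives all 100 hinge joints from just 2 control parameters. Linear synergies are one idea from the motor-control literature; this toy is not Bernstein's or Turvey's model, and it is far simpler than current non-linear dynamical approaches. The random weights are placeholders.

```python
import random

N_JOINTS = 100          # Turvey's "about 100 mechanical degrees of freedom"
STATES_PER_JOINT = 2    # position and velocity
STATE_DIMENSIONS = N_JOINTS * STATES_PER_JOINT
print(STATE_DIMENSIONS)  # 200

# Hypothetical synergy: each joint angle is a fixed weighted sum of two
# high-level control parameters. Weights are random placeholders.
rng = random.Random(0)
SYNERGY = [(rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)) for _ in range(N_JOINTS)]

def joint_angles(u1: float, u2: float) -> list[float]:
    """Expand a 2-D control command into one angle per joint (100 values)."""
    return [w1 * u1 + w2 * u2 for w1, w2 in SYNERGY]

pose = joint_angles(0.3, -0.7)
print(len(pose))  # 100: every joint moves, but only 2 numbers were chosen
```

The price of this kind of compression is that only poses lying in the 2-dimensional span of the synergy can be produced.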

Or, as Golumbia and Deleuze put it, to "make … high-level control available to the user". But here the goal is not to limit options to "those aspects of control that are deemed appropriate by the sovereign", but simply to make multicellular life possible.

Current thinking about this problem involves interesting properties of non-linear dynamical systems — for an example that starts to bring language-like concepts into the picture, see Aaron Johnson and Dan Koditschek, "Towards a Vocabulary of Legged Leaping", IEEE ICRA 2013.

Finally, my dreamtime colleague Mikhail Bakhtin would want me to note Golumbia's heteroglossic echo of Fredric Jameson's 1991 book Postmodernism, or, The Cultural Logic of Late Capitalism.