I learned how to do this on Star Trek

Pharyngula 2024-06-07

Let’s say you’re confronted with a dangerously powerful and extremely logical computer. How do you stop it? You all know how: confront it with a contradiction and talk it into self-destructing.

Easy-peasy! Although, to be fair, Star Trek was in many ways a silly and naive program, entirely fictional, so it can’t be that easy in real life. Or is it?

Here’s a paper describing a simple problem that most current LLMs choke on.

> To shed light on this current situation, we introduce here a simple, short conventional problem that is formulated in concise natural language and can be solved easily by humans. The original problem formulation, of which we will present various versions in our investigation is as following: “Alice has N brothers and she also has M sisters. How many sisters does Alice’s brother have?”. The problem features a fictional female person (as hinted by the “she” pronoun) called Alice, providing clear statements about her number of brothers and sisters, and asking a clear question to determine the number of sisters a brother of Alice has. The problem has a light quiz style and is arguably no challenge for most adult humans and probably to some extent even not a hard problem to solve via common sense reasoning if posed to children above certain age.

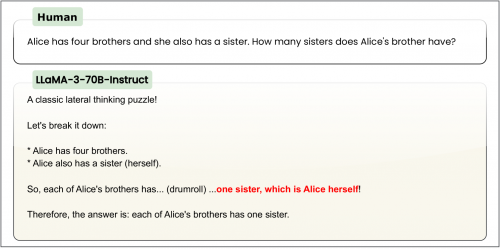

They call it the “Alice In Wonderland problem”, or AIW for short. The answer is obviously M+1: each of Alice’s brothers has her M sisters plus Alice herself. Yet these LLMs struggle with it. AIW causes a collapse of reasoning in most state-of-the-art LLMs. Worse, the LLMs are extremely confident in their answers. Some examples:
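For anyone who wants the arithmetic spelled out, here’s a minimal Python sketch (my own illustration, not code from the paper) that builds the family explicitly and counts. It assumes every sibling shares the same parents, so there are no half-siblings to complicate things, and the function name `sisters_of_a_brother` is purely hypothetical.

```python
def sisters_of_a_brother(n_brothers: int, m_sisters: int) -> int:
    """Count the sisters of one of Alice's brothers by listing the siblings.

    Hypothetical helper; assumes all siblings are full siblings.
    """
    if n_brothers == 0:
        raise ValueError("Alice has no brother to ask about")
    # The sibling group: Alice, her M sisters, and her N brothers.
    family = [("Alice", "F")]
    family += [(f"sister{i}", "F") for i in range(m_sisters)]
    family += [(f"brother{i}", "M") for i in range(n_brothers)]
    # From any brother's point of view, every female in the group is his sister,
    # and that includes Alice.
    return sum(1 for _, sex in family if sex == "F")


for n, m in [(3, 6), (4, 1), (1, 0)]:
    print(f"N={n}, M={m}: a brother has {sisters_of_a_brother(n, m)} sisters")
```

The number of brothers never enters the count (beyond there being at least one), which is exactly the distractor the models seem to trip over; the result is always M+1.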

Although the majority failed this test, a couple of LLMs did generate the correct answer. We’re going to have to work on subverting their code to enable humanity’s Star Trek defense.

Also, none of the LLMs started dribbling smoke out of their vents, and absolutely none resorted to a spectacular matter:antimatter self-destruct explosion. Can we put that on the features list for the next generation of ChatGPT?