Kai-Cheng Yang: The Social Bots are Coming…

...My heart's in Accra 2023-11-08

Kai-Cheng Yang is the creator of Botometer, which was – until 2023 – the state of the art for bot detection on Twitter. He’s a recent graduate of Indiana University, where he worked with Fil Menczer, and is now a postdoc with David Lazer at Northeastern University. His talk at UMass’s EQUATE series today focuses on bot detection – the talk is titled “Fantastic Bots and How to Find Them”.

The world of bot detection is changing rapidly: Yang suggests we think of bot detection as having three phases: pre-2023, 2023 and the forthcoming world of AI-driven social bots. In other words, bot detection is likely to get a lot harder in the very near future.

Kai-Cheng Yang speaking at UMass Amherst

What’s a bot? Yang’s definition is simple: it’s a social media account controlled, at least in part, by software. In the early days, this might be a Python script that generated content and posted it to social media via the Twitter API. These days, bad actors might run a botnet of hundreds of cellphones controlled from a single computer, posting disinformation in a way that’s very difficult for platforms to detect. The newest botnets operate using virtual cellphones hosted in the cloud. Yang invites us to imagine these hard-to-detect botnets powered by large language models, which would make detection based on content extremely difficult.

Yang is (rightly) celebrated for his work on Botometer, which was – until recent changes to the Twitter/X API – freely available for researchers to use. Detecting bots matters because bots are often used to manipulate online discussion. Using his own tools, Yang discovered botnets promoting antivax information during COVID lockdowns; he found very few bots trying to share credible information from sites like CDC.gov. One signature of these bots: they kick in and amplify COVID misinformation within a minute of its initial posting.
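The “within a minute” signature is easy to measure once you have timestamps. Here is a minimal sketch, assuming you already have an original post’s time and a list of (account, retweet time) pairs; the function names, the 60-second window and the sample data are all hypothetical, and this isn’t how Botometer itself computes timing features.

```python
from datetime import datetime, timedelta

def _within(original_time, t, window):
    """True if t falls in [original_time, original_time + window]."""
    delay = t - original_time
    return timedelta(0) <= delay <= window

def fast_amplifiers(original_time, retweets, window_seconds=60):
    """Accounts whose retweet arrived within `window_seconds` of the original post.

    `retweets` is a list of (account_id, retweet_time) pairs (hypothetical format).
    """
    window = timedelta(seconds=window_seconds)
    return sorted({acct for acct, t in retweets if _within(original_time, t, window)})

def fast_share(original_time, retweets, window_seconds=60):
    """Fraction of all retweets that landed inside the window."""
    if not retweets:
        return 0.0
    window = timedelta(seconds=window_seconds)
    return sum(_within(original_time, t, window) for _, t in retweets) / len(retweets)

# Invented example: two near-instant retweets and one three hours later.
posted = datetime(2020, 4, 1, 12, 0, 0)
rts = [
    ("acct_a", posted + timedelta(seconds=8)),
    ("acct_b", posted + timedelta(seconds=41)),
    ("acct_c", posted + timedelta(hours=3)),
]
print(fast_amplifiers(posted, rts))  # ['acct_a', 'acct_b']
print(fast_share(posted, rts))
```

A single fast retweet means little, since plenty of humans are quick on the trigger; the signal is the same accounts showing up in the first minute again and again.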

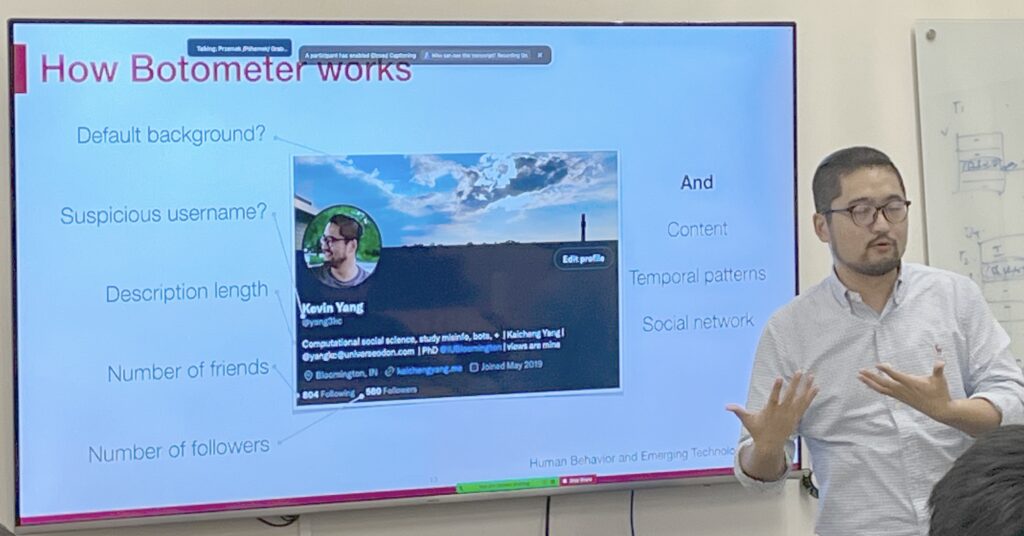

At its heart, Botometer is a supervised machine learning model. It looks at a set of signals (default background images, suspicious usernames, short profile descriptions, few friends or followers) to identify likely bots. Other factors matter as well: Botometer looks at the content shared, the temporal patterns of posting and the social graph of the bot accounts. The project started in Menczer’s lab in 2014; Yang took it over in 2017, updating the model as human and bot behavior changed and building a user-friendly interface.
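To make “supervised model over profile signals” concrete, here is a toy sketch: a handful of hypothetical profile features fed to a logistic regression trained with plain gradient descent. The field names, features and training accounts are invented for illustration. Botometer itself uses far more features and a different classifier, so treat this only as an illustration of the general idea.

```python
import math

def extract_features(account):
    """Map a profile dict to numeric features. Field names are hypothetical."""
    username = account["username"]
    digit_share = sum(ch.isdigit() for ch in username) / max(len(username), 1)
    return [
        1.0 if account["default_background"] else 0.0,    # default profile background
        digit_share,                                        # crude "suspicious username" proxy
        1.0 if len(account["description"]) < 20 else 0.0,  # very short bio
        math.log1p(account["friends"]),                     # log-scaled friend count
        math.log1p(account["followers"]),                   # log-scaled follower count
    ]

def _sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(X, y, lr=0.1, epochs=2000):
    """Fit logistic-regression weights with batch gradient descent on log-loss."""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = _sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def bot_score(model, account):
    """Score in (0, 1); higher means more bot-like."""
    w, b = model
    x = extract_features(account)
    return _sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Toy labeled examples invented for illustration: 1 = bot, 0 = human.
TRAIN = [
    ({"username": "user84729301", "default_background": True, "description": "",
      "friends": 3, "followers": 1}, 1),
    ({"username": "news4u99812", "default_background": True, "description": "news",
      "friends": 0, "followers": 2}, 1),
    ({"username": "jane_doe", "default_background": False,
      "description": "Librarian, hiker, bad at chess. Opinions my own.",
      "friends": 300, "followers": 450}, 0),
    ({"username": "m_okafor", "default_background": False,
      "description": "PhD student studying coastal ecology.",
      "friends": 150, "followers": 220}, 0),
]

model = train_logistic([extract_features(a) for a, _ in TRAIN], [lbl for _, lbl in TRAIN])
```

Because behavior shifts over time, a real model has to be retrained on fresh labeled accounts. That is the maintenance work the paragraph above describes.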

One big fan of Botometer, at least initially? Elon Musk. He tried to make a case, using Botometer, that Twitter had inflated its user numbers with bots and that he should therefore be able to back out of his acquisition of the platform. Yang ended up testifying in the Twitter/Musk case, arguing that Musk was misinterpreting the tool’s results.

In 2023, Twitter changed the pricing structure for its APIs, making it extremely expensive for academic users to build tools like Botometer. Botometer no longer works, and Yang warns that future versions will need to be much less powerful. There are some legitimate reasons why Musk and other CEOs want to restrict API access: social media data is powerful training material for large language models. But the loss of tools like Botometer means that we’re facing an even more challenging information environment during the 2024 elections.

Yang introduces us to a new generation of AI-powered social bots. He’s found them through a very simple method: he searches for the phrase “I’m sorry – as a large language model, I’m not allowed to…”, which ChatGPT uses to signal that you’ve hit one of its “guardrails”: limits on behaviors it is not allowed to engage in, meant to keep it in “alignment” with the goals of the system. Seeing this phrase is a clear sign that an account is powered by a large language model.
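The search itself is little more than pattern matching. A minimal sketch, assuming you already have (account, text) pairs; the regular expression is an illustrative guess at common wordings, not Yang’s actual query, and the posts are invented.

```python
import re

# Illustrative pattern only: different models and versions word their
# refusals differently, so a real search would need more variants.
SELF_REVEALING = re.compile(r"\bas an? (?:ai |large )?language model\b", re.IGNORECASE)

def self_revealing_accounts(posts):
    """Return the sorted ids of accounts with at least one matching post.

    `posts` is an iterable of (account_id, text) pairs (hypothetical format).
    """
    return sorted({acct for acct, text in posts if SELF_REVEALING.search(text)})

posts = [
    ("acct_1", "I’m sorry – as a large language model, I’m not allowed to share that."),
    ("acct_2", "Great game last night!"),
    ("acct_3", "As an AI language model, I cannot browse the internet."),
]
print(self_revealing_accounts(posts))  # ['acct_1', 'acct_3']
```

A human joking about ChatGPT would match too, so hits are leads to inspect rather than verdicts.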

And that’s a good thing, because otherwise, these accounts are very hard to spot. They are well-developed accounts with human names, background images, descriptions, friends and followers. They post text and pictures and interact with each other. Once you know where they are, analyzing their social graph reveals some meaningful signals. These bots are tightly linked to each other in networks where everyone follows everyone else. That’s not how people connect with one another in reality. Usually some people become “stars” or “influencers” and are followed by many people, while the rest of us fall into the long tail. We can tell the follower distribution in these bot networks is artificial because it has no long tail.
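Two simple numbers capture the contrast: network density (what share of possible follow links exist) and inequality of follower counts. The sketch below uses a Gini coefficient of in-degree as the inequality measure. That is my choice for illustration, not necessarily the statistic Yang used, and both toy networks are hypothetical.

```python
from itertools import permutations

def in_degrees(nodes, edges):
    """Follower count per node; `edges` are (follower, followee) pairs."""
    deg = {n: 0 for n in nodes}
    for _, followee in edges:
        deg[followee] += 1
    return deg

def density(nodes, edges):
    """Share of all possible directed follow edges that exist (no self-follows)."""
    n = len(nodes)
    possible = n * (n - 1)
    return len(set(edges)) / possible if possible else 0.0

def gini(values):
    """0.0 when everyone has the same follower count; near 1.0 when a few
    accounts hold nearly all the followers."""
    xs = sorted(values)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        return 0.0
    weighted = sum((i + 1) * x for i, x in enumerate(xs))
    return 2 * weighted / (n * total) - (n + 1) / n

# Hypothetical bot cluster: every account follows every other account.
bots = ["b1", "b2", "b3", "b4", "b5"]
bot_edges = list(permutations(bots, 2))

# Hypothetical human cluster: four people follow one popular account.
people = ["star", "p1", "p2", "p3", "p4"]
human_edges = [(p, "star") for p in people[1:]]

for name, nodes, edges in [("bots", bots, bot_edges), ("humans", people, human_edges)]:
    print(name, density(nodes, edges), round(gini(in_degrees(nodes, edges).values()), 2))
```

The all-follow-all cluster comes out at maximal density with perfectly equal follower counts. The star-shaped cluster is sparse and highly unequal, which is closer to what real human networks look like.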

Additionally, these bots are a little too good. On Twitter, you can post original content, reply to someone else’s content, or retweet other content. The AI bots are very well balanced: they engage in each behavior roughly a third of the time. Real humans are all over the map: some people just retweet, some just post. That sheer regularity is itself a sign of automation.
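One way to quantify “too balanced” is the normalized entropy of an account’s action mix. It is 1.0 for exact thirds and 0.0 for an account that does only one thing. This measure and the action labels are assumptions for illustration, not necessarily Yang’s method.

```python
import math
from collections import Counter

ACTIONS = ("post", "reply", "retweet")  # hypothetical action labels

def action_mix_entropy(actions):
    """Normalized Shannon entropy of an account's post/reply/retweet mix.

    Returns 1.0 for perfectly even thirds and 0.0 for a single action type.
    """
    counts = Counter(a for a in actions if a in ACTIONS)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    h = -sum((c / total) * math.log(c / total) for c in counts.values())
    return h / math.log(len(ACTIONS))

balanced = ["post", "reply", "retweet"] * 10   # bot-like even split
retweeter = ["retweet"] * 30                   # a human who only retweets
print(action_mix_entropy(balanced), action_mix_entropy(retweeter))
```

One evenly balanced human proves nothing. What stands out is a whole network of accounts all sitting near 1.0.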

Why did someone spin up this network? Probably to manipulate cryptocurrency markets: the accounts largely promoted a set of crypto news sites. Is there a better way to find these accounts than “self-revealing tweets”, which are essentially error messages? Yang tried running the content of these posts through an OpenAI tool designed to detect AI-generated text. Unfortunately, the OpenAI tool doesn’t work especially well, and OpenAI has since taken it down. Yang saw high error rates and found people whose writing registered as more bot-like than texts actually written by the known bots.
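Checking a detector like this comes down to comparing its scores against texts whose origin you already know. The sketch below takes scores as plain numbers and does not call any real detector API. The scores, labels and 0.5 threshold are invented for illustration.

```python
def error_rates(scores, labels, threshold=0.5):
    """False-positive and false-negative rates for a text detector.

    scores: the detector's AI-likelihood for each text, in [0, 1].
    labels: True if the text is known to be AI-written, False if human-written.
    """
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    negatives = sum(1 for y in labels if not y)
    positives = sum(1 for y in labels if y)
    fpr = fp / negatives if negatives else 0.0
    fnr = fn / positives if positives else 0.0
    return fpr, fnr

def humans_scoring_above_bots(scores, labels):
    """Count human texts scored as more AI-like than the lowest-scoring AI text."""
    ai_scores = [s for s, y in zip(scores, labels) if y]
    if not ai_scores:
        return 0
    floor = min(ai_scores)
    return sum(1 for s, y in zip(scores, labels) if not y and s > floor)

# Invented scores: two known-bot texts, two human texts.
scores = [0.9, 0.3, 0.7, 0.6]
labels = [True, True, False, False]
print(error_rates(scores, labels), humans_scoring_above_bots(scores, labels))
```

The second function captures the failure Yang describes: once human writing scores above text from known bots, no single threshold can separate the two.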

Yang argues that the future of bot detection lies in spotting “clear defects” and “inconsistencies”. Generative adversarial networks (GANs) can create convincing-looking faces, but some of these images contain serious errors that humans can easily spot. Other signals are inconsistencies, such as a female face paired with a stereotypically male name. Like the “self-revealing tweets”, these are signals we can use to detect AI accounts.

Signals like these also show where AI bots are headed. Yang sees evidence of reviewers on Amazon, Yelp and other platforms using GPT to generate text and GANs to create faces. We might expect platforms to be working to block these manipulative behaviors, and they are, but they’re also embracing generative AI as a way to revive their communities. Meta is trying to create AI-powered chatbots to keep people engaged with Instagram and Messenger. And someone clever has introduced a social network entirely for AIs, no humans allowed.

The future of the AI industry, Yang tells us, is generative agents. These are systems that can take in and comprehend information, reason, make decisions, act, and use tools. As these tools get more powerful, we must be conscious of the fact that not all these agents will be used for good. We will need to keep getting better at identifying these rogue agents and their goals… and right now, the bot builders are in the lead.

The post Kai-Cheng Yang: The Social Bots are Coming… appeared first on Ethan Zuckerman.