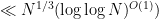

Dimitris Koukoulopolous and I have just uploaded to the arXiv our paper “A note on the mean value of the Hooley delta function“. This paper concerns a (still somewhat poorly understood) basic arithmetic function in multiplicative number theory, namely the Hooley delta function

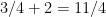

where

The function

measures the extent to which the divisors of a natural number can be concentrated in a dyadic (or more precisely,

-dyadic) interval

![{(e^u, e^{u+1}]}](https://s0.wp.com/latex.php?latex=%7B%28e%5Eu%2C+e%5E%7Bu%2B1%7D%5D%7D&bg=ffffff&fg=000000&s=0&c=20201002)

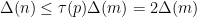

. From the pigeonhole principle, we have the bounds

where

is the usual divisor function. The statistical behavior of the divisor function is well understood; for instance, if

is drawn at random from

to

, then the mean value of

is roughly

, the median is roughly

, and (by the

Erdos-Kac theorem

asymptotically has a log-normal distribution). In particular, there are a small proportion of highly divisible numbers that skew the mean to be significantly higher than the median.

On the other hand, the statistical behavior of the Hooley delta function is significantly less well understood, even conjecturally. Again drawing  at random from

at random from  to

to  for large

for large  , the median is known to be somewhere between

, the median is known to be somewhere between  and

and  for large

for large  – a (difficult) recent result of Ford, Green, and Koukoulopolous (for the lower bound) and de la Bréteche and Tenenbaum (for the upper bound). And the mean

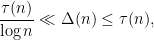

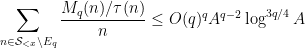

– a (difficult) recent result of Ford, Green, and Koukoulopolous (for the lower bound) and de la Bréteche and Tenenbaum (for the upper bound). And the mean  was even less well controlled; the best previous bounds were

was even less well controlled; the best previous bounds were

for any

, with the lower bound

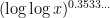

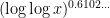

due to Hall and Tenenbaum, and the upper bound a recent result of

de la Bréteche and Tenenbaum.

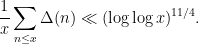

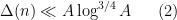

The main result of this paper is an improvement of the upper bound to

It is still unclear to us exactly what to conjecture regarding the actual order of the mean value.

The reason we looked into this problem was that it was connected to forthcoming work of David Conlon, Jacob Fox, and Huy Pham on the following problem of Erdos: what is the size of the largest subset  of

of  with the property that no non-empty subset of

with the property that no non-empty subset of  sums to a perfect square? Erdos observed that one can obtain sets of size

sums to a perfect square? Erdos observed that one can obtain sets of size  (basically by considering certain homogeneous arithmetic progressions), and Nguyen and Vu showed an upper bound of

(basically by considering certain homogeneous arithmetic progressions), and Nguyen and Vu showed an upper bound of  . With our mean value bound as input, together with several new arguments, Conlon, Fox, and Pham have been able to improve the upper bound to

. With our mean value bound as input, together with several new arguments, Conlon, Fox, and Pham have been able to improve the upper bound to  .

.

Let me now discuss some of the ingredients of the proof. The first few steps are standard. Firstly we may restrict attention to square-free numbers without much difficulty (the point being that if a number  factors as

factors as  with

with  squarefree, then

squarefree, then  ). Next, because a square-free number

). Next, because a square-free number  can be uniquely factored as

can be uniquely factored as  where

where  is a prime and

is a prime and  lies in the finite set

lies in the finite set  of squarefree numbers whose prime factors are less than

of squarefree numbers whose prime factors are less than  , and

, and  , it is not difficult to establish the bound

, it is not difficult to establish the bound

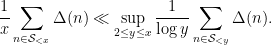

The upshot of this is that one can replace an ordinary average with a logarithmic average, thus it suffices to show

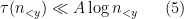

We actually prove a slightly more refined distributional estimate: for any

, we have a bound

outside of an exceptional set

which is small in the sense that

It is not difficult to get from this distributional estimate to the logarithmic average estimate

(1) (worsening the exponent

to

).

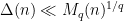

To get some intuition on the size of  , we observe that if

, we observe that if  and

and  is the factor of

is the factor of  coming from the prime factors less than

coming from the prime factors less than  , then

, then

On the other hand, standard estimates let one establish that

for all

, and all

outside of an exceptional set that is small in the sense

(3); in fact it turns out that one can also get an additional gain in this estimate unless

is close to

, which turns out to be useful when optimizing the bounds. So we would like to approximately reverse the inequalities in

(4) and get from

(5) to

(2), possibly after throwing away further exceptional sets of size

(3).

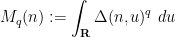

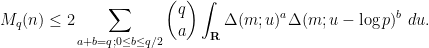

At this point we perform another standard technique, namely the moment method of controlling the supremum  by the moments

by the moments

for natural numbers

; it is not difficult to establish the bound

and one expects this bound to become essentially sharp once

. We will be able to show a moment bound

for any

for some exceptional set

obeying the smallness condition

(3) (actually, for technical reasons we need to improve the right-hand side slightly to close an induction on

); this will imply the distributional bound

(2) from a standard Markov inequality argument (setting

).

The strategy is then to obtain a good recursive inequality for (averages of)  . As in the reduction to (1), we factor

. As in the reduction to (1), we factor  where

where  is a prime and

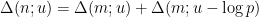

is a prime and  . One observes the identity

. One observes the identity

for any

; taking moments, one obtains the identity

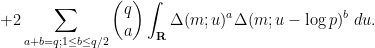

As in previous literature, one can try to average in

here and apply Hölder’s inequality. But it convenient to first use the symmetry of the summand in

to reduce to the case of relatively small values of

:

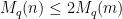

One can extract out the

term as

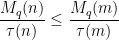

It is convenient to eliminate the factor of

by dividing out by the divisor function:

This inequality is suitable for iterating and also averaging in

and

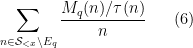

. After some standard manipulations (using the Brun–Titchmarsh and Hölder inequalities), one is able to estimate sums such as

in terms of sums such as

(assuming a certain monotonicity property of the exceptional set

that turns out to hold in our application). By an induction hypothesis and a Markov inequality argument, one can get a reasonable pointwise upper bound on

(after removing another exceptional set), and the net result is that one can basically control the sum

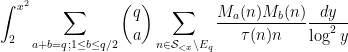

(6) in terms of expressions such as

for various

. This allows one to estimate these expressions efficiently by induction.

at random from

to

for large

, the median is known to be somewhere between

and

for large

– a (difficult) recent result of Ford, Green, and Koukoulopolous (for the lower bound) and de la Bréteche and Tenenbaum (for the upper bound). And the mean

was even less well controlled; the best previous bounds were

of

with the property that no non-empty subset of

sums to a perfect square? Erdos observed that one can obtain sets of size

(basically by considering certain homogeneous arithmetic progressions), and Nguyen and Vu showed an upper bound of

. With our mean value bound as input, together with several new arguments, Conlon, Fox, and Pham have been able to improve the upper bound to

.

factors as

with

squarefree, then

). Next, because a square-free number

can be uniquely factored as

where

is a prime and

lies in the finite set

of squarefree numbers whose prime factors are less than

, and

, it is not difficult to establish the bound

, we observe that if

and

is the factor of

coming from the prime factors less than

, then

by the moments

. As in the reduction to (1), we factor

where

is a prime and

. One observes the identity