Five Worlds of AI (a joint post with Boaz Barak)

Shtetl-Optimized 2023-04-27

Artificial intelligence has made incredible progress in the last decade, but in one crucial aspect, it still lags behind the theoretical computer science of the 1990s: namely, there is no essay describing five potential worlds that we could live in and giving each one of them whimsical names. In other words, no one has done for AI what Russell Impagliazzo did for complexity theory in 1995, when he defined the five worlds Algorithmica, Heuristica, Pessiland, Minicrypt, and Cryptomania, corresponding to five possible resolutions of the P vs. NP problem along with the central unsolved problems of cryptography.

In this blog post, we, Scott and Boaz, aim to remedy this gap. Specifically, we consider five possible scenarios for how AI will evolve in the future. (Incidentally, it was at a 2009 workshop devoted to Impagliazzo’s five worlds, co-organized by Boaz, that Scott met his now-wife, complexity theorist Dana Moshkovitz. We hope civilization will continue for long enough that someone in the future could meet their soulmate, or neuron-mate, at a future workshop about our five worlds.)

As in Impagliazzo’s 1995 paper on the five potential worlds of the difficulty of NP problems, we will not try to be exhaustive but rather concentrate on extreme cases. It’s possible that we’ll end up in a mixture of worlds or a situation not described by any of the worlds. Indeed, one crucial difference between our setting and Impagliazzo’s is that in the complexity case, the worlds corresponded to concrete (and mutually exclusive) mathematical conjectures. So in some sense, the question wasn’t “which world will we live in?” but “which world have we Platonically always lived in, without knowing it?” In contrast, the impact of AI will be a complex mix of mathematical bounds, computational capabilities, human discoveries, and social and legal issues. Hence, the worlds we describe depend on more than just the fundamental capabilities and limitations of artificial intelligence, and humanity could also shift from one of these worlds to another over time.

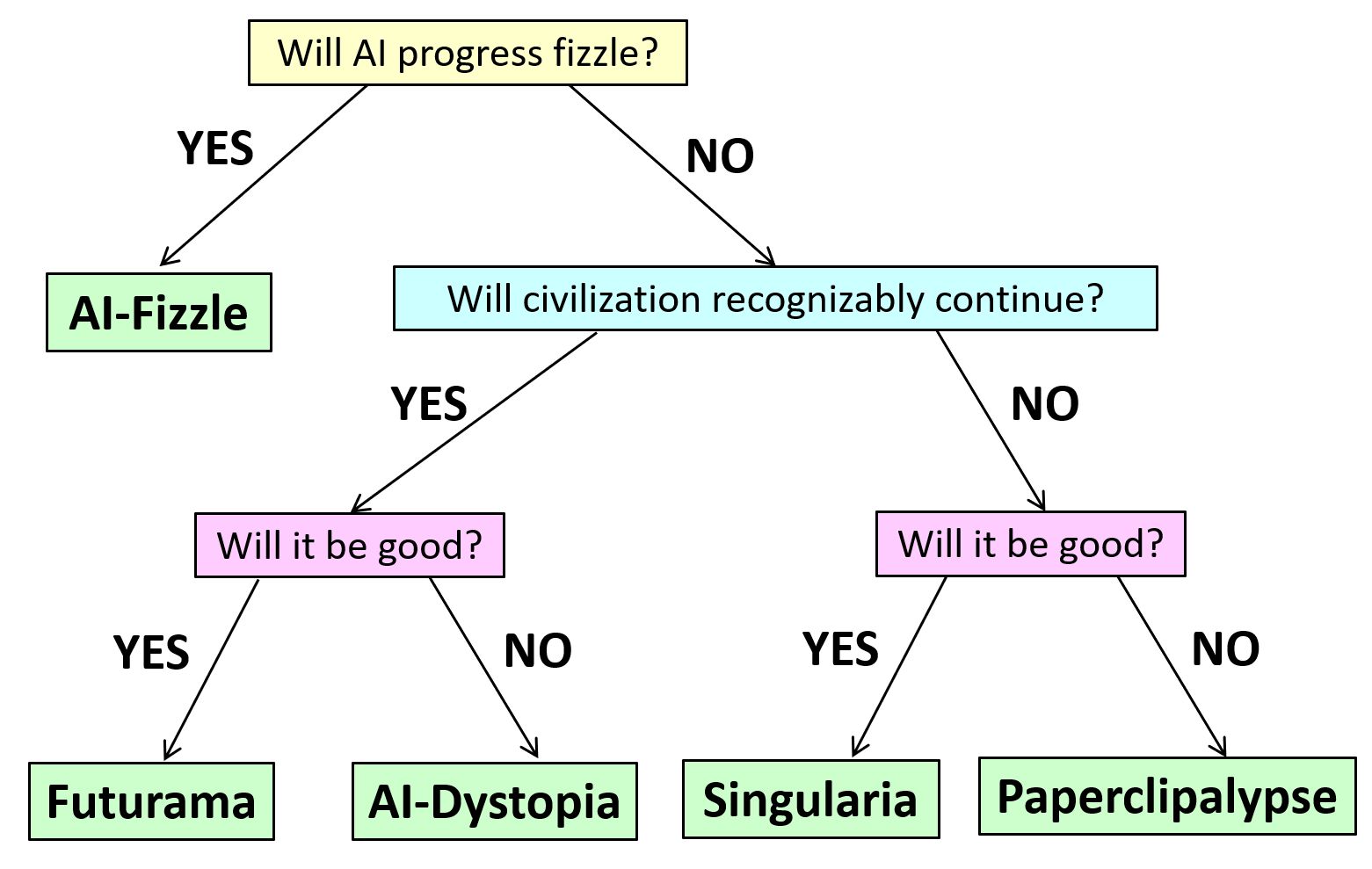

Without further ado, we name our five worlds “AI-Fizzle,” “Futurama,” “AI-Dystopia,” “Singularia,” and “Paperclipalypse.” In this essay, we don’t try to assign probabilities to these scenarios; we merely sketch their assumptions and technical and social consequences. We hope that by making assumptions explicit, we can help ground the debate on the various risks around AI.

AI-Fizzle. In this scenario, AI “runs out of steam” fairly soon. AI still has a significant impact on the world (so it’s not the same as a “cryptocurrency fizzle”), but relative to current expectations, this would be considered a disappointment. Rather than the industrial or computer revolutions, AI might be compared in this case to nuclear power: people were initially thrilled about the seemingly limitless potential, but decades later, that potential remains mostly unrealized. With nuclear power, though, many would argue that the potential went unrealized mostly for sociopolitical rather than technical reasons. Could AI also fizzle by political fiat?

Regardless of the answer, another possibility is that costs (in data and computation) scale up so rapidly as a function of performance and reliability that AI is not cost-effective to apply in many domains. That is, it could be that for most jobs, humans will still be more reliable and energy-efficient (we don’t normally think of low wattage as being key to human specialness, but it might turn out that way!). So, like nuclear fusion, an AI which yields dramatically more value than the resources needed to build and deploy it might always remain a couple of decades in the future. In this scenario, AI would replace and enhance some fraction of human jobs and improve productivity, but the 21st century would not be the “century of AI,” and AI’s impact on society would be limited for both good and bad.

Futurama. In this scenario, AI unleashes a revolution that’s entirely comparable to the scientific, industrial, or information revolutions (but “merely” those). AI systems grow significantly in capabilities and perform many of the tasks currently performed by human experts at a small fraction of the cost, in some domains superhumanly. However, AI systems are still used as tools by humans, and except for a few fringe thinkers, no one treats them as sentient. AI easily passes the Turing test, can prove hard math theorems, and can generate entertaining content (as well as deepfakes). But humanity gets used to that, just like we got used to computers creaming us in chess, translating text, and generating special effects in movies. Most people no more feel inferior to their AI than they feel inferior to their car because it runs faster. In this scenario, people will anthropomorphize AI less over time (as happened with digital computers themselves). In “Futurama,” AI will, like any revolutionary technology, be used for both good and bad, but like prior major technological revolutions, on the whole, AI will have a large positive impact on humanity. AI will be used to reduce poverty and ensure that more of humanity has access to food, healthcare, education, and economic opportunities. The fraction of people living in democracies increases. In “Futurama,” AI systems will sometimes cause harm, but the vast majority of these failures will be due to human negligence or maliciousness. Some AI systems might be so complex that it would be best to model them as potentially behaving “adversarially,” and part of the practice of deploying AIs responsibly would be to ensure an “operating envelope” that limits their potential damage even under adversarial failures.

AI-Dystopia. The technical assumptions of “AI-Dystopia” are similar to those of “Futurama,” but the upshot could hardly be more different. Here, again, AI unleashes a revolution on the scale of the industrial or computer revolutions, but the change is markedly for the worse. AI greatly increases the scale of surveillance by government and private corporations. It causes massive job losses while enriching a tiny elite. It entrenches society’s existing inequalities and biases. And it takes away a central tool against oppression: namely, the ability of humans to refuse or subvert orders.

Interestingly, it’s even possible that the same future could be characterized as Futurama by some people and as AI-Dystopia by others, just as some people emphasize how our current technological civilization has lifted billions out of poverty into a standard of living unprecedented in human history, while others focus on the still-existing (and in some cases rising) inequalities and suffering and consider it a neoliberal capitalist dystopia.

Singularia. Here AI breaks out of the current paradigm, in which increasing capabilities require ever-growing resources of data and computation, and no longer needs human data or human-provided hardware and energy to become stronger at an ever-increasing pace. AIs improve their own intellectual capabilities, including by developing new science, and (whether by deliberate design or happenstance) they act as goal-oriented agents in the physical world. They can effectively be thought of as an alien civilization, or perhaps as a new species, which is to us as we were to Homo erectus.

Fortunately, though (and again, whether by careful design or just as a byproduct of their human origins), the AIs act toward us like benevolent gods and lead us to an “AI utopia.” They solve our material problems for us, giving us unlimited abundance and presumably virtual-reality adventures of our choosing. (Though maybe, as in The Matrix, the AIs will discover that humans need some conflict, and we will all live in a simulation of 2020’s Twitter, constantly dunking on one another…)

Paperclipalypse. In “Paperclipalypse” or “AI Doom,” we again think of future AIs as a superintelligent “alien race” that doesn’t need humanity for its own development. Here, though, the AIs are either actively opposed to human existence or else indifferent to it in a way that causes our extinction as a byproduct. In this scenario, AIs do not develop a notion of morality comparable to ours or even a notion that keeping a diversity of species and ensuring humans don’t go extinct might be useful to them in the long run. Rather, the interaction between AI and Homo sapiens ends about the same way that the interaction between Homo sapiens and Neanderthals ended.

In fact, the canonical depictions of such a scenario imagine an interaction that is much more abrupt than our brush with the Neanderthals. The idea is that, perhaps because they originated through some optimization procedure, AI systems will have some strong but weirdly specific goal (à la “maximizing paperclips”), for which the continued existence of humans is, at best, a hindrance. So the AIs quickly play out the scenarios and, in a matter of milliseconds, decide that the optimal solution is to kill all humans, taking a few extra milliseconds to make a plan for that and execute it. If conditions are not yet ripe for executing their plan, the AIs pretend to be docile tools, as in the “Futurama” scenario, waiting for the right time to strike. In this scenario, self-improvement happens so quickly that humans might not even notice it. There need be no intermediate stage in which an AI “merely” kills a few thousand humans, raising 9/11-type alarm bells.

Regulations. The practical impact of AI regulations depends, in large part, on which scenarios we consider most likely. Regulation is not terribly important in the “AI-Fizzle” scenario where AI, well, fizzles. In “Futurama,” regulations would be aimed at ensuring that on balance, AI is used more for good than for bad, and that the world doesn’t devolve into “AI-Dystopia.” The latter goal requires antitrust and open-science regulations to ensure that power is not concentrated in a few corporations or governments. Thus, regulations are needed to democratize AI development more than to restrict it. This doesn’t mean that AI would be completely unregulated. It might be treated somewhat similarly to drugs: something that can have complex effects and needs to undergo trials before mass deployment. There would also be regulations aimed at reducing the chance of “bad actors” (whether other nations or individuals) getting access to cutting-edge AIs, but probably the bulk of the effort would go toward increasing the chance of thwarting them (e.g., using AI to detect AI-generated misinformation, or using AI to harden systems against AI-aided hackers). This is similar to how most academic experts believe cryptography should be regulated (and how it is largely regulated these days in most democratic countries): it’s a technology that can be used for both good and bad, but the cost of restricting its access to regular citizens outweighs the benefits. However, as we do with security exploits today, we might restrict or delay public releases of AI systems to some extent.

To whatever extent we foresee “Singularia” or “Paperclipalypse,” however, regulations play a completely different role. If we knew we were headed for “Singularia,” then presumably regulations would be superfluous, except perhaps to try to accelerate the development of AIs! Meanwhile, if one accepts the assumptions of “Paperclipalypse,” any regulations other than the most draconian might be futile. If, in the near future, almost anyone will be able to spend a few billion dollars to build a recursively self-improving AI that might turn into a superintelligent world-destroying agent, and moreover (unlike with nuclear weapons) they won’t need exotic materials to do so, then it’s hard to see how to forestall the apocalypse, except perhaps via a worldwide, militarily enforced agreement to “shut it all down,” as Eliezer Yudkowsky indeed now explicitly advocates. “Ordinary” regulations could, at best, delay the end by a short amount: given the current pace of AI advances, perhaps not more than a few years. Thus, regardless of how likely one considers this scenario, one might want to focus more on the other scenarios for methodological reasons alone!