Mathematics of the impossible: Computational Complexity, Chapter 3: The grand challenge

Thoughts 2023-02-02

All posts in this series. A PDF version of this post will be published with a delay, but if you’d like to have it soon let me know.

Contents

As mentioned in Chapter ??, our ability to prove impossibility results related to efficient computation appears very limited. We can now express this situation more precisely with the models we’ve introduced since then.

- in Time

on a TM, and

- in Time

on a 2-TM, and

- by circuits of size

.

Note that 2. implies 1. by Theorem ??.

In this chapter we begin to present several impossibility results, covering a variety of techniques which will be used later as well. As hinted above, they appear somewhat weak. However, jumping ahead, there is a flip side to all of this:

- At times, contrary to our intuition, stronger impossibility results are actually false. One example appears in Chapter ??. A list will be given later.

- Many times, the impossibility results that we can prove turn out to be, surprisingly, just “short” of proving major results. Here by “major result” I mean a result that would be phenomenal and that was in focus long before the connection was established. We will see several examples of this (section º??, section º??).

- Yet other times, one can identify broad classes of proof techniques, and argue that impossibility results can’t be proved with them (section º??).

Given this situation, I don’t subscribe to the general belief that stronger impossibility results are true and we just can’t prove them.

1.1 Information bottleneck: Palindromes requires quadratic time on TMs

Intuitively, the weakness of TMs is the bottleneck of passing information from one end of the tape to the other. We now show how to formalize this and use it show that deciding if a string is a palindrome requires quadratic time on TMs, which is tight and likely matches the time in Exercise ??. The same bound can be shown for other functions; palindromes just happen to be convenient to obtain matching bounds.

More precisely, for every and

, an

-state TM that decides if an

-bit input is a palindrome requires time

.

The main concept that allows us to formalize the information bottleneck mentioned above is the following.

Definition 1.1. A crossing sequence of a TM M on input and boundary

, abbreviated

-CS, is the sequence of states that

is transitioning to when crossing cell boundary

(i.e., going from Cell

to

or vice versa) during the computation on

.

The idea in the proof is very interesting. If accepts inputs

and

and those two inputs have the same

-CS for some

, then we can “stitch together” the computation of

on

and

at boundary

to create a new input

that is still accepted by

. The input

is formed by picking bits from

to the left of cell boundary

and bits from

to the right of

:

The proof that is still accepted is left as an exercise.

Now, for many problems, input should not be accepted by

, and this gives a contradiction. In particular this will be be the case for palindromes. We are going to find two palindromes

and

that have the same

-CS for some

, but the corresponding

is not a palindrome, yet it is still accepted by

. We can find these two palindromes if

takes too little time. The basic idea is that if

runs in time

, because

-CSs for different

correspond to different steps of the computation, for every input there is a value of

such that the

-CS is short, namely has length at most

. If

is much less than

, the length of this CS is much less than

, from which we can conclude that the number of CSs is much less than the number of inputs, and so we can find two inputs with the same CS.

Proof. Let be divisible by four, without loss of generality, and consider palindromes of the form

where and

is the reverse of

.

Assume there are in

and

in the middle part, i.e.,

, so that the

-CS of

on

and

is the same. Then we can define

which is not a palindrome but is still accepted by

, concluding the proof.

There remains to prove that the assumption of Theorem 1.1 implies the assumption in the previous paragraph. Suppose runs in time

. Since crossing sequences at different boundaries correspond to different steps of the computation, for every

there is a value of

in the middle part such that the

-CS of

on

has length

. This implies that there is an

in the middle s.t. there are

inputs

for which the

-CS of

on

has length

.

For fixed , the number of

-CS of length

is

.

Hence there are for which

and

have the same

-CS whenever

. Taking logs one gets

. QED

Exercise 1.1. For every and

describe an

-state TM deciding palindromes in time

(matching Theorem 1.1).

One may be tempted to think that it is not hard to prove stronger bounds for similar functions. In fact as mentioned above this has resisted all attempts!

1.2 Counting: impossibility results for non-explicit functions

Proving the existence of hard functions is simple: Just count. If there are more functions than efficient machines, some function is not efficiently computable. This is applicable to any model; next we state it for TMs for concreteness. Later we will state it for circuits.

Theorem 1.2. There exists a function that cannot be computed by a TM with

states unless

, regardless of time.

Proof. The number of TMs with states is

and each TM computes at most one function (it may compute none, if it does not stop). The number of functions on

bits is

. Hence if

some function cannot be computed. QED

Note this bound is not far from that in Exercise ??.

It is instructive to present this basic result as an application of the probabilistic method:

Proof. Let us pick uniformly at random. We want to show that

Indeed, if the probability is less than than some function exists that cannot be computed. By a union bound we can say that this probability is

where the sum is over all -state machines. Each probability in the sum is

, since

is fixed. The number of

-state machines is

. So the sum is

, and we can conclude as before taking logs. QED

1.3 Diagonalization: Enumerating machines

Can you compute more if you have more time? For example, can you write a program that runs in time and computes something that cannot be computed in time

? The answer is yes for trivial reasons if we allow for non-boolean functions.

The answer is more interesting if the functions are boolean. Such results are known as time hierarchies, and a generic technique for proving them is diagonalization, applicable to any model.

We first illustrate the result in the simpler case of partial functions, which contains the main ideas. Later we discuss total functions.

In other words, .

Proof. Consider the TM that on input

of length

runs

on

until it stops and then complements the answer. (We can use a simple encoding of these pairs, for example every even-position bit of the description of

is a

.)

Now define to be the subset of pairs s.t.

runs in time

on inputs of length

, and

.

On these inputs, runs in time

, as desired. To accomplish this,

can begin by making a copy of

in time

. Then every step of the computation of

can be simulated by

with

steps, always keeping the description of

to the left of the head.

Now suppose computes the same function as

in time

. Note that

falls in the domain

of the function, for

sufficiently large, using that

. Now consider running

on

. We have

by supposition, but

is the complement of

, contradiction. QED

This proof is somewhat unsatisfactory; in particular we have no control on the running time of on inputs not in

. It is desirable to have a version of this fundamental result for total functions. Such a version is stated next. It requires additional assumptions on

and a larger gap between the running times. Perhaps surprisingly, as we shall discuss, both requirements are essential.

Theorem 1.4. Let be a function. Suppose that

is in

.

There is a total function in that cannot be computed by any TM

in time

.

The assumption about is satisfied by all standard functions, including all those in this book. (For example, take

. The corresponding

is then

. To compute

on input

of

bits we can first compute

in time

(Exercise ??). This is a number of

bits. We can then square this number in time

. Note that the time to compute

is dominated by the

term coming from computing

, which does not depend on

and is much less than the

in the assumption.) The assumption cannot be removed altogether because there exist pathological functions

for which the result is false.

The proof is similar to that of Theorem 1.3. However, to make the function total we need to deal with arbitrary machines, which may not run in the desired time or even stop at all. The solution is to clock in a manner similar to the proof of the universal machine, Lemma ??.

Also, we define a slightly different language to give a stronger result – a unary language – and to avoid some minor technical details (the possibility that the computation of erases the input).

We define a TM that on input

obtains a description of a TM

, computes

, and then simulates

on input

for

steps in a way similar to Lemma ??, and if

stops then

outputs the complement of the output of

; and if

does not stop then

stops and outputs anything. Now

computes a function in time about

. We argue that this function cannot be computed in much less time as follows. Suppose some TM

computes the function in time somewhat less than

. Then we can pick an

for which

obtains the description of this

, and the simulation always stops. Hence, for that

we would obtain

, which is a contradiction.

However, there are interesting differences with the simulation in Lemma ??. In that lemma the universal machine was clocking the steps of the machine

being simulated. Now instead we need to clock the steps of

itself, even while

is parsing the description of

to compute its transition function. This is necessary to guarantee that

does not waste time on big TM descriptions.

Whereas in Lemma ?? the tape was arranged as

it will now be arranged as

which is parsed as follows. The description of is

,

is in state

, the tape of

contains

and the head is on the left-most symbol of

. The integer

is the counter decreased at every step

Proof. Define TM that on input

:

- Compute

.

- Compute

. Here

is obtained from

by removing all left-most zeroes until the first

. I.e., if

then

. This is similar to the fact that a program does not change if you add, say, empty lines at the bottom.

- Simulate

on

, reducing the counter

at every step, including those parsing

, as explained before.

- If

stops before the counter reaches

, output the complement of its output. If the counter reaches

stop and output anything.

Running time of .

- Computing

is similar to Exercise ??. By assumption

is computable in time

. Our definition of computation allows for erasing the input, but we can keep

around spending at most another

factor. Thus we can compute

in time

. Finally, in case it was erased, we can re-compute

in time

by keeping a counter (initialized to a copy of

).

- This takes time

: simply scan the input and remove zeroes.

- Decreasing the counter takes

steps. Hence this simulation will take

time.

Overall the running time is .

Proof that the function computed by requires much time. Suppose some TM

computes the same function as

. Consider inputs

where

. Parsing the description of

to compute its transition function takes time

, a value that depends on

only and not on

. Hence

will simulate

steps of

. If

stops within that time (which requires

to be larger than

, and so

and

sufficiently large compared to

) then the simulation terminates and we reach a contradiction as explained before. QED

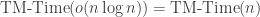

The extra factor cannot be reduced because of the surprising result presented in Theorem 1.5 showing that, on TMs, time

equals time

for computing total functions.

However, tighter time hierarchies hold for more powerful models, like RAMs. Also, a time hierarchy for total functions for is… an open problem! But a hierarchy is known for partial functions.

Exercise 1.4. (1) State and prove a tighter time hierarchy for Time (which recall corresponds to RAMs) for total functions. You don’t need to address simulation details, but you need to explain why a sharper separation is possible.

(2) Explain the difficulty in extending (1) to .

(3) State and prove a time hierarchy for for partial functions.

1.3.1

In this subsection we prove the result in the title, which we also mentioned earlier. First let us state the result formally.

Note that time is barely enough to scan the input. Indeed, the corresponding machines in Theorem 1.5 will only move the head in one direction.

The rest of this section is devoted to proving the above theorem. Let be a machine for

witnessing the assumption of the theorem. We can assume that

stops on every input (even though our definition of time only applies to large enough inputs), possibly by adding

to the time, which does not change the assumption on

. The theorem now follows from the combination of the next two lemmas.

Proof. For an integer let

be a shortest input such that there exists

for which the

-CS in the computation of

on

has length

. Let

.

There are tape boundaries within or bordering

. If we pick a boundary uniformly at random, the average length of a CS on

is

. Hence there are

choices for

s.t. the

-CS on

has length

.

The number of such crossing sequences is

Hence, the same crossing sequence occurs at positions

, using that

is large enough.

Of these four, one could be the CS of length from the definition of

. Of the other three, two are on the same side of

. We can remove the corresponding interval of the input without removing the CS of length

. Hence we obtained a shorter input with a CS of length

. Contradiction. QED

Lemma 1.2. Suppose is computable by a TM such that on every input

, every

-CS with

has length

. Then

is computable in time

by a TM with

states that only moves the head in one direction.

1.4 Circuits

The situation for circuits is similar to that of 2-TMs, and by Theorem ?? we know that proving bounds on circuits is harder than for 2-TMs. Even a bound of

is unknown. The following is a circuit version of Theorem 1.2. Again, the bound is close to what we saw in Theorem ??.

Theorem 1.6. [3] There are functions that require circuits of size

, for every

.

One can prove a hierarchy for circuit size, by combining Theorem 1.6 and Theorem ??. As stated, the results give that circuits of size compute more functions than those of size

. In fact, the “

” in the theorems is small, so one can prove a sharper result. But a stronger and more enjoyable argument exists.

Theorem 1.7. [Hierarchy for circuit size] For every and

there is a function

that can be computed by circuits of size

but not by circuits of size

.

Proof. Consider a path from the all-zero function to a function which requires circuits of size , guaranteed to exist by Theorem 1.6, changing the output of the function on one input at each step of the path. Let

be the first function that requires size

, and let

be the function right before that in the path. Note that

has circuits of size

, and

can be computed from

by changing the value on a single input. The latter can be implemented by circuits of size

. QED

Exercise 1.7. Prove a stronger hierarchy result for alternating circuits, where the “” in Theorem 1.7 is replaced with “

.”

In fact, this improvement is possible even for (non aternating) circuits, see Problem 1.2.

1.4.1 The circuit won’t fit in the universe: Non-asymptotic, cosmological results

Most of the results in this book are asymptotic, i.e., we do not explicitly work out the constants because they become irrelevant for larger and larger input lengths. As stated, these results don’t say anything for inputs of a fixed length. For example, any function on bits is in Time

.

However, it is important to note that all the proofs are constructive and one can work out the constants, and produce non-asymptotic results. We state next one representative example when this was done. It is about a problem in logic, an area which often produces very hard problems.

On an alphabet of size , the language used to write formulas includes first-order variables that range over

, second-order variables that range over finite subsets of

, the predicates “

” and “

” where

and

denote first-order variables and

denotes a set variable, and standard quantifiers, connectives, constants, binary relation symbols on integers, and set equality. For example one can write things like “every finite set has a maximum:”

Theorem 1.8. [4] To decide the truth of logical formulas of length at most in this language requires a circuit containing at least

gates. So even if each gate were the size of a proton, the circuit would not fit in the known universe.

Their result applies even to randomized circuits with error , if

is replaced with

. (We can define randomized circuits analogously to BPTime.)

1.5 Problems

Problem 1.1. Hierarchy Theorem 1.4 only gives a function that cannot be computed fast on all large enough input lengths: it is consistent with the theorem that

can be computed fast on infinitely many input lengths.

Give a function mapping

to

that is computable in time

but such that for any TM

running in time

the following holds. For every

and every

such that

.

Hint: Note the range of . Can this result hold as stated with range

?

Problem 1.2. Replace “” in Theorem 1.7 with “

.”

This shows Theorem 1.5 is tight, and gives an explicit problem witnessing the time-hierarchy separation in Theorem 1.4, for a specific time bound. For the negative result, don’t use pumping lemmas or other characterization results not covered in this book.

Problem 1.4. The following argument contradicts Theorem 1.4; what is wrong with it?

References

[1] F. C. Hennie. One-tape, off-line turing machine computations. Information and Control, 8(6):553–578, 1965.

[2] Kojiro Kobayashi. On the structure of one-tape nondeterministic turing machine time hierarchy. Theor. Comput. Sci., 40:175–193, 1985.

[3] Claude E. Shannon. The synthesis of two-terminal switching circuits. Bell System Tech. J., 28:59–98, 1949.

[4] Larry Stockmeyer and Albert R. Meyer. Cosmological lower bound on the circuit complexity of a small problem in logic. J. ACM, 49(6):753–784, 2002.