How NASA got an Android handset ready to go into space

Ars Technica » Scientific Method 2013-03-23

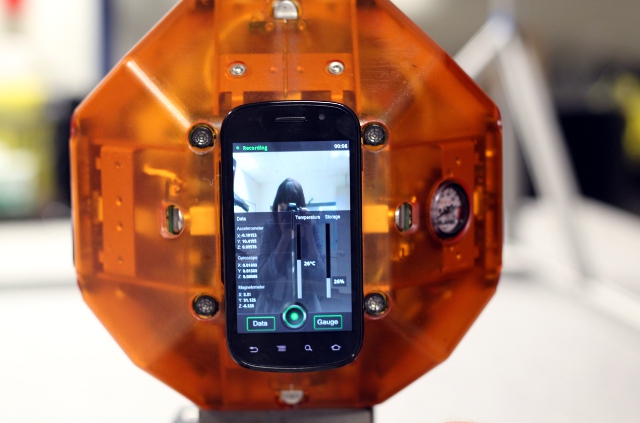

It’s what science fiction dreams are made of: brightly colored, sphere-shaped robots that float above the ground, controlled by a tiny computer brain. But it isn't fiction: it’s the SPHERES satellite, and its brain is an Android smartphone.

Two and a half years ago, the Human Exploration and Telerobotics Project (HET) equipped a trio of these floating robots with Nexus S handsets running Android Gingerbread. (SmartSPHERES, which stands for “Synchronized Position Hold, Engage, Reorient, Experimental Satellites,” is a project at NASA's Ames Research Center that's funded by HET.) Despite their name, these SPHERES aren't traditional satellites: they're currently being used inside the International Space Station (ISS) to investigate applications like telerobotic cameras and high-latency control, and to measure sound and radiation levels.

More generally, the Android phones will help HET test out new ways of sensing and modeling the ISS so that robots can eventually become an integral part of the space station's operations. Space exploration is still largely a human-controlled operation, but by equipping each of these self-contained satellites with its own Nexus S, the team has enabled them to navigate autonomously while researchers provide high-level commands from Earth. Two Nexus S smartphones are currently at work in the ISS.
