Among the new phrases…

Language Log 2024-10-25

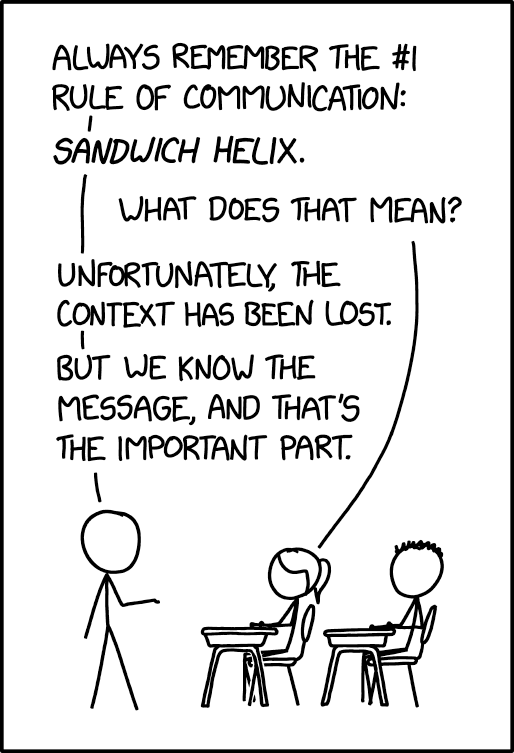

Today's Tank McNamara:

According to the NFL, a "hip drop tackle" "occurs when a defender wraps up a ball carrier and rotates or swivels his hips, unweighting himself and dropping onto ball carrier’s legs during the tackle". And I would have more or less guessed that meaning, before getting the authoritative definition.

A Sandwich Helix, on the other hand…

…seems to have been invented to represent an uninterpretable two-word sequence (though maybe I'm missing something?). The mouseover title for that xkcd strip is "The number one rule of string manipulation is that you’ve got to specify your encodings", which is plausible but doesn't exactly help.

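For readers who haven't run into it, the mouseover's rule is easy to demonstrate. The Python sketch below is only an illustration under an assumption of my own: that a curly apostrophe was written out as UTF-8 and then read back as Windows-1252 (Python's `cp1252` codec), which is one common way this kind of debris arises. Nothing in the strip says which codecs are involved.

```python
# Sketch (assumption: UTF-8 bytes misread as Windows-1252, i.e. "cp1252").
original = "you\u2019ve got to specify your encodings"  # U+2019 is the curly apostrophe

# Encoding as UTF-8 turns U+2019 into the three bytes E2 80 99.
raw = original.encode("utf-8")

# Decoding with the wrong codec reads each of those bytes as its own character:
# E2 -> "â", 80 -> "€", 99 -> "™".
garbled = raw.decode("cp1252")

# Decoding with the codec the bytes were actually written in round-trips cleanly.
restored = raw.decode("utf-8")

# Because cp1252 maps every one of these characters back to its byte,
# reversing the mistake recovers the original text.
repaired = garbled.encode("cp1252").decode("utf-8")

print(garbled)                 # youâ€™ve got to specify your encodings
print(restored == original)    # True
print(repaired == original)    # True
```

The repair step only works because no bytes were lost along the way; once a mis-decoded string has been re-saved or truncated, as often happens in web scraping, it may not be recoverable.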

There are some sandwich-helix-type things in the biochemistry literature, FWIW, where in particular helix-heme-helix sandwiches have been discussed at length; but I doubt Randall Munroe had that in mind.

My failed attempt to decode "sandwich helix" reminds me of a recently proposed AI evaluation — Nicholas Riccardi et al. "The Two Word Test as a semantic benchmark for large language models", 2024:

Abstract: Large language models (LLMs) have shown remarkable abilities recently, including passing advanced professional exams and demanding benchmark tests. This performance has led many to suggest that they are close to achieving humanlike or “true” understanding of language, and even artificial general intelligence (AGI). Here, we provide a new open-source benchmark, the Two Word Test (TWT), that can assess semantic abilities of LLMs using two-word phrases in a task that can be performed relatively easily by humans without advanced training. Combining multiple words into a single concept is a fundamental linguistic and conceptual operation routinely performed by people. The test requires meaningfulness judgments of 1768 noun-noun combinations that have been rated as meaningful (e.g., baby boy) or as having low meaningfulness (e.g., goat sky) by human raters. This novel test differs from existing benchmarks that rely on logical reasoning, inference, puzzle-solving, or domain expertise. We provide versions of the task that probe meaningfulness ratings on a 0–4 scale as well as binary judgments. With both versions, we conducted a series of experiments using the TWT on GPT-4, GPT-3.5, Claude-3-Opus, and Gemini-1-Pro-001. Results demonstrated that, compared to humans, all models performed relatively poorly at rating meaningfulness of these phrases. GPT-3.5-turbo, Gemini-1.0-Pro-001 and GPT-4-turbo were also unable to make binary discriminations between sensible and nonsense phrases, with these models consistently judging nonsensical phrases as making sense. Claude-3-Opus made a substantial improvement in binary discrimination of combinatorial phrases but was still significantly worse than human performance. The TWT can be used to understand and assess the limitations of current LLMs, and potentially improve them. The test also reminds us that caution is warranted in attributing “true” or human-level understanding to LLMs based only on tests that are challenging for humans.

Of course, there are lots of word combinations that are opaque unless you're in the know, like "fog computing".