GIST: Now with local step size adaptation for NUTS

Statistical Modeling, Causal Inference, and Social Science 2024-08-16

After 12 years, we’ve finally figured out how to do NUTS-like local step-size adaptation for NUTS that preserves detailed balance. This is starting from Michael Betancourt’s revised multinomial NUTS, as used in Stan, with a biasing toward the last doubling.

We just released the arXiv paper, and would love to get feedback on it.

- Nawaf Bou-Rabee, Bob Carpenter, Tore Selland Kleppe, Milo Marsden. 2024. Incorporating Local Step-Size Adaptivity into the No-U-Turn Sampler using Gibbs Self Tuning. arXiv 2408.08259.

Nawaf just gave a talk summarizing our work on GIST at the Bernoulli conference in Germany and I gave a talk on it at Sam Livingstone’s London workshop in June.

It works!

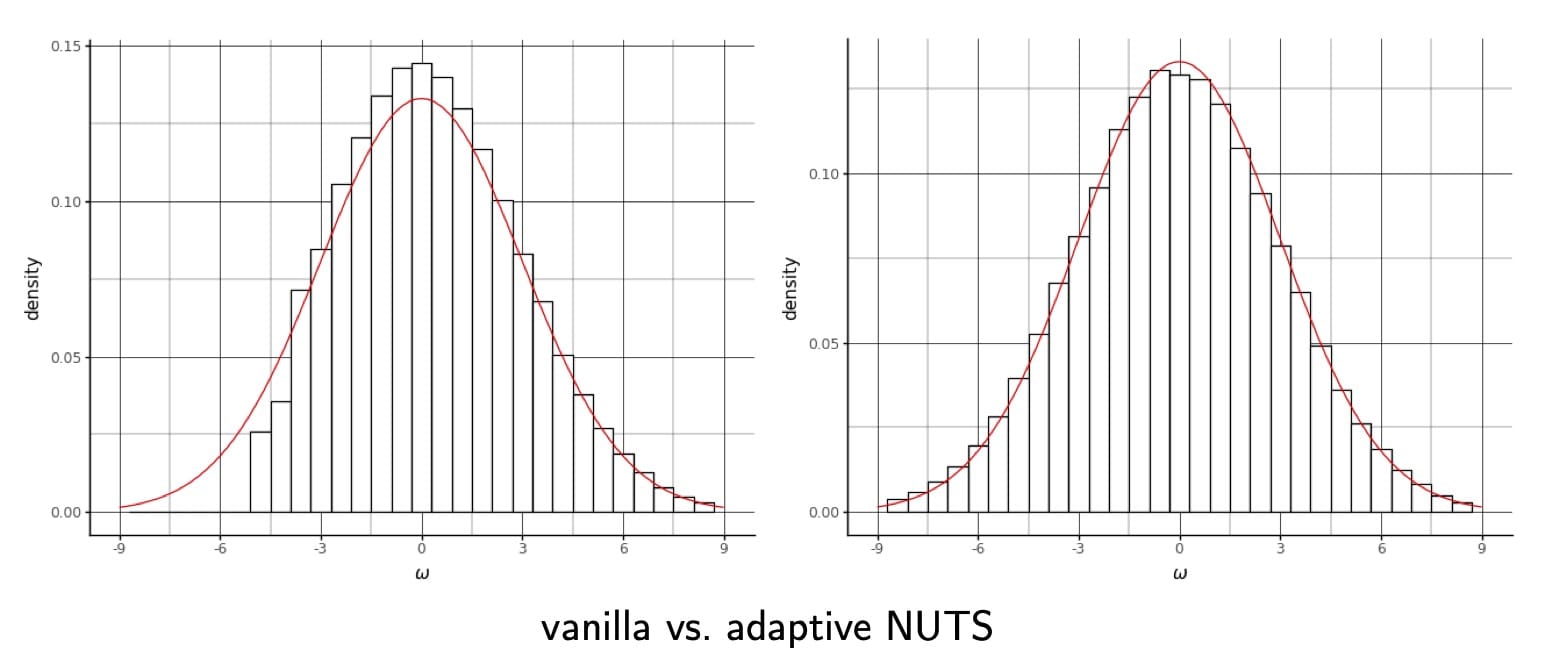

Here’s a plot from the paper showing the marginal draws from the log scale parameter of Neal’s funnel in both our new local stepwise adapting GIST and Stan’s implementation of NUTS.

Notice that NUTS isn’t getting into the neck of the funnel where the log scale parameter is low—this would’ve been easier to see with shaded histogram fill, so we should fix that for the revised paper.

The idea behind GIST

In GIST, we couple the tuning parameters (step size, number of steps, mass matrix) with the position and momentum. We do this in the same way as HMC itself coupled momentum with position. Specifically, we design a conditional distribution of tuning parameters given position and momentum. We then resample the tuning parameters in a Gibbs step similarly to how we resample the momentum. The Hamiltonian (in this case NUTS) component is then a simple Metropolis-within-Gibbs step (like in vanilla HMC). Unlike in HMC, where the momentum distribution is independent of the position distribution, in GIST we have a dependency between tuning parameters and position/momentum, so we require a non-trivial Metropolis-Hastings step (unlike NUTS). The trick is in designing a conditional distribution of tuning parameters that does the right local adaptation and leads to a high acceptance rate—that’s what the papers show how to do.

It might be easier to start with the first GIST paper, which introduces the framework and shows that NUTS, apogee-to-apogee, randomized, and multinomial HMC can be framed as GIST samplers, as well as introducing a simpler U-turn-based alternative to NUTS.

- Nawaf Bou-Rabee, Bob Carpenter, Milo Marsden. 2024. GIST: Gibbs self-tuning for locally adaptive Hamiltonian Monte Carlo. arXiv 2404.15253.

Mass matrix adaptation anyone?

I have a proof-of-concept working that does local mass matrix adaptation, and while it works nearly perfectly to precondition multivariate normals, I haven’t been able to get it to work to locally precondition non-log-concave targets like Neal’s funnel.

Reproducible code

Code to reproduce the paper results and also for our ongoing experiments is available in our public GitHub repo,

Delayed rejection (generalized) HMC

This is following on from some other work I did with collaborators here at Flatiron Institute on local step size adaptation using delayed rejection for Hamiltonian Monte Carlo,

- Chirag Modi, Alex Barnett, Bob Carpenter. 2024. Delayed rejection Hamiltonian Monte Carlo for sampling multiscale distributions. Bayesian Analysis 19(3).

We recently expanded this work use generalized HMC, which is much more efficient and enables more “local” step size adaptation than what we do globally in GIST and delayed rejection HMC,

- Gilad Turok, Chirag Modi, Bob Carpenter. 2024. Sampling From Multiscale Densities With Delayed Rejection Generalized Hamiltonian Monte Carlo. arXiv 2406.02741.

Chirag is extending the DR-G-HMC work with GIST and an efficient L-BFGS-like approach to estimating mass matrix. This seems to work better than what I’ve tried purely within GIST. Stay tuned!

Job candidates

Milo Marsden, who did a lot of the heavy living on the GIST papers, is a Stanford applied math Ph.D. student who’s graduating this year and looking for a postdoc or faculty position.

Gilad Turok, who took the lead on the DR-G-HMC paper, was an undergrad intern from Columbia University applied math who stuck around as a research analyst at Flatiron this year. He’s looking to apply to grad school in comp stats/ML next year. Keep an eye out for our Blackjax package implementing Agrawal and Domke’s approach to realNVP normalizing flow VI.