PNAS GIGO QRP WTF: This meta-analysis of nudge experiments is approaching the platonic ideal of junk science

Statistical Modeling, Causal Inference, and Social Science 2022-01-07

Nick Brown writes:

You might enjoy this… Some researchers managed to include 11 articles by Wansink, including the “bottomless soup” study, in a meta-analysis in PPNAS.

Nick links to this post from Aaron Charlton which provides further details.

The article in question is called “The effectiveness of nudging: A meta-analysis of choice architecture interventions across behavioral domains,” so, yeah, this pushes several of my buttons.

But Nick is wrong about one thing. I don’t enjoy this at all. It makes me very sad.

An implausibly large estimate of average effect size

Let’s take a look. From the abstract of the paper:

Our results show that choice architecture interventions [“nudging”] overall promote behavior change with a small to medium effect size of Cohen’s d = 0.45 . . .

Wha . . .? An effect size of 0.45 is not “small to medium”; it’s huge. Huge as in implausible that these little interventions would shift people, on average, by half a standard deviation. I mean, sure, if the data really show this, then it would be notable—it would be big news—because it’s a huge effect.
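
To get a feel for what half a standard deviation means in practice, here is a minimal sketch that converts d = 0.45 into two common summaries. It assumes normally distributed outcomes with equal variance in both groups. That assumption is made here for illustration and is not taken from the paper, and the function names are hypothetical.

```python
from statistics import NormalDist


def percentile_of_average_treated(d):
    """Share of the control group scoring below the average treated person."""
    return NormalDist().cdf(d)


def probability_of_superiority(d):
    """Chance that a randomly chosen treated person outscores a randomly chosen control."""
    return NormalDist().cdf(d / 2 ** 0.5)


d = 0.45
pct = 100 * percentile_of_average_treated(d)
ps = probability_of_superiority(d)
print(f"average treated person sits at about control percentile {pct:.0f}")
print(f"probability of superiority: about {ps:.2f}")
```

Under these assumptions, the average nudged person would land at roughly the 67th percentile of the un-nudged group. That is a lot to ask of a small intervention, on average, across behavioral domains.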

Why does this matter? Who cares if they label massive effects as “small to medium”? It’s important because it’s related to expectations and, from there, to the design and analysis of experiments. If you think that a half-standard-deviation effect size is “small to medium,” i.e. reasonable, then you might well design studies to detect effects of that size. Such studies will be super-noisy to the extent that they can pretty much only detect effects of that size or larger; then at the analysis stage researchers are expecting to find large effects, so through forking paths they find them, and through selection that’s what gets published, leading to a belief from those who read the literature that this is how large the effects really are . . . it’s an invidious feedback loop.
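
The first link in that loop can be sketched with a small simulation. This is a rough illustration under stated assumptions, not an analysis of the studies in the paper: a two-group design, a normal approximation with standard error sqrt(2/n) for the estimated d, and a hypothetical true effect of 0.1. The true effect is a made-up number chosen for illustration, and the function names are hypothetical too.

```python
import math
import random
from statistics import NormalDist, mean


def n_per_group(d, power=0.80, alpha=0.05):
    """Approximate per-group sample size to detect effect d (normal approximation)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / d ** 2)


def significance_filter(true_d, n, sims=50_000, alpha=0.05, seed=1):
    """Simulate estimated effects and keep only the statistically significant ones."""
    rng = random.Random(seed)
    se = math.sqrt(2 / n)  # approximate standard error of d with n per group
    threshold = NormalDist().inv_cdf(1 - alpha / 2) * se
    draws = (rng.gauss(true_d, se) for _ in range(sims))
    kept = [est for est in draws if abs(est) > threshold]
    return {
        "threshold": threshold,
        "share_significant": len(kept) / sims,
        "mean_abs_significant": mean(abs(est) for est in kept),
    }


# Design the study as if d = 0.45 were a reasonable expectation ...
n = n_per_group(0.45)
# ... then see what gets through the filter if the true effect is 0.1 (hypothetical).
TRUE_D = 0.1
result = significance_filter(TRUE_D, n)
print(f"n per group: {n}")
print(f"smallest estimate that can reach significance: {result['threshold']:.2f}")
print(f"share of studies reaching significance: {result['share_significant']:.2f}")
print(f"average size of significant estimates: {result['mean_abs_significant']:.2f}")
```

With a design sized for d = 0.45, no estimate below about 0.3 can reach statistical significance. So if the true effect is anything like 0.1, every significant result is an overestimate by a factor of three or more.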

There are various ways to break the feedback loop of noisy designs, selection, and huge reported effect sizes. One way to cut the link is by preregistration and publishing everything; another is less noisy studies (I’ll typically recommend better measurements and within-person designs); another is to critically examine the published literature in aggregate (as in the work of Gregory Francis, Uri Simonsohn, Ulrich Schimmack, and others); another is to look at what went wrong in particular studies (as in the work of Nick Brown, Carol Nickerson, and others); one can study selection bias (as in the work of John Ioannidis and others); and yet another step is to think more carefully about effect sizes and recognize the absurdity of estimates of large and persistent average effects (recall the piranha problem).

The claim of an average effect of 0.45 standard deviations does not, by itself, make the article’s conclusions wrong—it’s always possible that such large effects exist—but it’s a bad sign, and labeling it as “small to medium” points to a misconception that reminds us of the process whereby these upwardly biased estimates get published.

What goes into the sausage? Pizzagate . . . and more

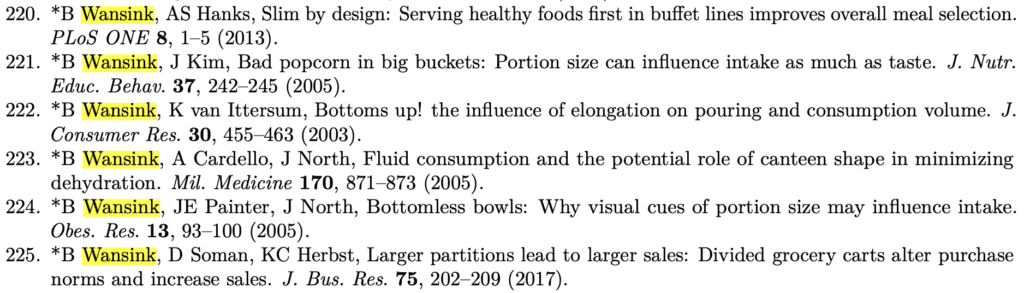

What about the Wansink articles? Did this meta-analysis, published in the year 2022, really make use of 11 articles authored or coauthored by that notorious faker? Ummm, yes, it appears the answer to that question is Yes:

[Images not preserved: the Wansink entries in the paper’s reference list.]

I can see how the authors could’ve missed this. The meta-analysis makes use of 219 articles (citations 16 through 234 in the supplementary material). It’s a lot of work to read through 219 articles. The paper has only 4 authors, and according to the Author contributions section, only two of them performed research. If each of these authors went through 109 or 110 papers . . . that’s a lot! It was enough effort just to read the study descriptions and make sure they fit with the nudging theme, and to pull out the relevant effect sizes. I can see how they might never have noticed the authors of the articles, or spent time to do Google or Pubpeer searches to find out if any problems had been flagged.

Similarly, I can see how the PNAS reviewers could’ve missed the 11 Wansink references, as they were listed deep in the Supplementary Information appendix to the paper. Who ever reads the supplementary information, right?

The trouble is, once this sort of thing is published and publicized, who goes back and checks anything? Aaron Charlton and Nick Brown did us a favor with their eagle eyes, reading the paper with more care than its reviewers or even its authors. Post-publication peer review ftw once more!

Also check out this from the article’s abstract:

Food choices are particularly responsive to choice architecture interventions, with effect sizes up to 2.5 times larger than those in other behavioral domains.

They didn’t seem to get the point that, with noisy studies, huge effect size estimates are not an indicator of huge effects; they’re an indication that the studies are too noisy to be useful. And that doesn’t even get into the possibility that the original studies are fraudulent.

But then I got curious. If this new paper cites the work of Wansink, would it cite any other embarrassments from the world of social psychology? The answer is a resounding Yes!:

[Image not preserved: the reference-list entry for a paper coauthored by Ariely.]

This article was retracted in a major scientific scandal that still isn’t going away.

The problems go deeper than any one (or 12) individual studies

Just to be clear: I would not believe the results of this meta-analysis even if it did not include any of the above 12 papers, as I don’t see any good reason to trust the individual studies that went into the meta-analysis. It’s a whole literature of noisy data, small sample sizes, and selection on statistical significance, hence massive overestimates of effect sizes. This is not a secret: look at the papers in question and you will see, over and over again, that they’re selecting what to report based on whether the p-value is less than 0.05. The problem here is not the p-value (I’d have a similar issue if they were to select on whether the Bayes factor is greater than 3, for example); rather, the problem is the selection, which induces noise (through the reduction of continuous data to a binary summary) and bias (by not allowing small effects to be reported at all).

Another persistent source of noise and bias is forking paths: selection of what analyses to perform. If researchers were performing a fixed analysis and reporting results based on a statistical significance filter, that would’ve been enough to induce huge biases here. But, knowing that only the significant results will count, researchers are also free to choose the details of their data coding and analysis to get these low p-values (see general discussions of researcher degrees of freedom, forking paths, and the multiverse), leading to even more bias.

In short, the research method used in this subfield of science is tuned to yield overconfident overestimates. And when you put a bunch of overconfident overestimates into a meta-analysis . . . you end up with an overconfident overestimate.
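
Here is a minimal sketch of that last step. It simulates many small studies of a hypothetical true effect of 0.1, "publishes" only the statistically significant ones, and pools the published estimates with simple fixed-effect inverse-variance weighting. Everything here is an assumption for illustration: the true effect, the range of sample sizes, the publication rule, and the pooling method, which is a stand-in and not the model used in the paper.

```python
import math
import random


def simulate_published_studies(true_d, n_studies, seed=2022):
    """Run n_studies two-group studies; return (estimate, se) for the significant ones."""
    rng = random.Random(seed)
    published = []
    for _ in range(n_studies):
        n = rng.randint(20, 100)       # per-group sample size (hypothetical range)
        se = math.sqrt(2 / n)          # approximate standard error of d
        est = rng.gauss(true_d, se)    # noisy estimate of the true effect
        if abs(est) > 1.96 * se:       # publish only if p < 0.05, in either direction
            published.append((est, se))
    return published


def pool_fixed_effect(studies):
    """Inverse-variance weighted average of the estimates, with its standard error."""
    weights = [1 / se ** 2 for _, se in studies]
    pooled = sum(w * est for w, (est, _) in zip(weights, studies)) / sum(weights)
    pooled_se = 1 / math.sqrt(sum(weights))
    return pooled, pooled_se


TRUE_D = 0.1  # hypothetical true effect
published = simulate_published_studies(TRUE_D, n_studies=2000)
pooled, pooled_se = pool_fixed_effect(published)
low, high = pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se
print(f"published {len(published)} of 2000 studies")
print(f"pooled estimate {pooled:.2f}, 95% interval ({low:.2f}, {high:.2f}), truth {TRUE_D}")
```

In this simulation the pooled estimate comes out several times larger than the true effect, with a narrow interval that excludes the truth: an overestimate, and an overconfident one.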

In that case, why mention the Wansink and Ariely papers at all? Because this indicates the lack of quality control of this whole project—it just reminds us of the attitude, unfortunately prevalent in so much of academia, that once something is published in a peer-reviewed journal, it’s considered to be a brick of truth in the edifice of science. That’s a wrong attitude!

If an estimate produced in the lab or in the field is noisy and biased, then it’s still noisy and biased after being published. Indeed, publication can exacerbate the bias. The decision in this article to include multiple publications by the entirely untrustworthy Wansink and an actually retracted paper by the notorious Ariely is just an example of this more general problem of taking published estimates at face value.

P.S. It doesn’t make me happy to criticize this paper, written by four young researchers who I’m sure are trying their best to do good science and to help the world.

So, you might ask, why do we have to be so negative? Why can’t we live and let live, why not celebrate the brilliant careers that can be advanced by publications in top journals, why not just be happy for these people?

The answer is, as always, that I care. As I wrote a few years ago, psychology is important and I have a huge respect for many psychology researchers. Indeed I have a huge respect for much of the research within statistics that has been conducted by psychologists. And I say, with deep respect for the field, that it’s bad news that its leaders publicize work that has fatal flaws.

It does not make me happy to point this out, but I’d be even unhappier to not point it out. It’s not just about Ted talks and NPR appearances. Real money—real resources—get spent on “nudging.” Brian Wansink alone got millions of dollars of corporate and government research funds and was appointed to a government position. The U.K. government has an official “Nudge Unit.” So, yeah, go around saying these things have huge effect sizes, that’s kind of an invitation to waste money and to do these nudges instead of other policies. That concerns me. It’s important! And the fact that this is all happening in large part because of statistical errors, that really bothers me. As a statistician, I feel bad about it.

And I want to convey this to the authors and audience of the sort of article discussed above, not to slam them but to encourage them to move on. There’s so much interesting stuff to discover about the world. There’s so much real science to do. Don’t waste your time on the fake stuff! You can do better, and the world can use your talents.

P.P.S. I’d say it’s kind of amazing that the National Academy of Sciences published a paper that was so flawed, both in its methods and its conclusions—but, then again, they also published the papers on himmicanes, air rage, ages ending in 9, etc. etc. They have their standards. It’s a sad reflection on the state of the American science establishment.

P.P.P.S. Usually I schedule these with a 6-month lag, but this time I’m posting right away (bumping our scheduled post for today, “At last! Incontrovertible evidence (p=0.0001) that people over 40 are older, on average, than people under 40.”), in the desperate hope that if we can broadcast the problems with this article right away, we can reduce its influence. A little nudge on our part, one might say. Two hours of my life wasted. But in a good cause.

Let me put it another way. I indeed think that “nudging” has been oversold, but the underlying idea—“choice architecture” or whatever you want to call it—is important. Defaults can make a big difference sometimes. It’s because I think the topic is important that I’m especially disappointed when it gets the garbage-in, garbage-out junk science treatment. The field can do better, and an important step in this process of doing better is to learn from its mistakes.