Exploring pre-registration for predictive modeling

Statistical Modeling, Causal Inference, and Social Science 2023-12-06

This is Jessica. Jake Hofman, Angelos Chatzimparmpas, Amit Sharma, Duncan Watts, and I write:

Amid rising concerns of reproducibility and generalizability in predictive modeling, we explore the possibility and potential benefits of introducing pre-registration to the field. Despite notable advancements in predictive modeling, spanning core machine learning tasks to various scientific applications, challenges such as data-dependent decision-making and unintentional re-use of test data have raised questions about the integrity of results. To help address these issues, we propose adapting pre-registration practices from explanatory modeling to predictive modeling. We discuss current best practices in predictive modeling and their limitations, introduce a lightweight pre-registration template, and present a qualitative study with machine learning researchers to gain insight into the effectiveness of pre-registration in preventing biased estimates and promoting more reliable research outcomes. We conclude by exploring the scope of problems that pre-registration can address in predictive modeling and acknowledging its limitations within this context.

Pre-registration is no silver bullet to good science, as we discuss in the paper and later in this post. However, my coauthors and I are cautiously optimistic that adapting the practice could help address a few problems that can arise in predictive modeling pipelines like research on applied machine learning. Specifically, there are two categories of concerns where pre-specifying the learning problem and strategy may lead to more reliable estimates.

First, most applications of machine learning are evaluated using predictive performance. Usually we evaluate this on held-out test data, because it’s too costly to obtain a continuous stream of new data for training, validation and testing. The separation is crucial: performance on held-out test data is arguably the key criterion in ML, so making reliable estimates of it is critical to avoid a misleading research literature. If we mess up and access the test data during training (test set leakage), then the results we report are overfit. It’s surprisingly easy to do this (see e.g., this taxonomy of types of leakage that occur in practice). While pre-registration cannot guarantee that we won’t still do this anyway, having to determine details like how exactly features and test data will be constructed a priori could presumably help authors catch some mistakes they might otherwise make.

Beyond test set leakage, other types of data-dependent decisions threaten the validity of test performance estimates. Predictive modeling problems admit many degrees-of-freedom that authors can (often unintentionally) exploit in the interest of pushing the results in favor of some a priori hypothesis, similar to the garden of forking paths in social science modeling. For example, researchers may spend more time tuning their proposed methods than baselines they compare to, making it look like their new method is superior when it is not. They might report on straw man baselines after comparing test accuracy across multiple variations. They might only report the performance metrics that make test performance look best. Etc. Our sense is that most of the time this is happening implicitly: people end up trying harder for the things they are invested in. Fraud is not the central issue, so giving people tools to help them avoid unintentionally overfitting is worth exploring.

Whenever the research goal is to provide evidence on the predictability of some phenomena (Can we predict depression from social media? Can we predict civil war onset? etc.) there’s a risk that we exploit some freedoms in translating the high level research goal to a specific predictive modeling exercise. To take an example my co-authors have previously discussed, when predicting how many re-posts a social media post will get based on properties of the person who originally posted, even with the dataset and model specification held fixed, exercising just a few degrees of freedom can change the qualitative nature of the results. If you treat it as a classification problem and build a model to predict whether a post will receive at least 10 re-posts, you can get accuracy close to 100%. If you treat it as a regression problem and predict how many re-posts a given post gets without any data filtering, R^2 hovers around 35%. The problem is that only a small fraction of posts exceed the threshold of 10 re-posts, and predicting which posts do—and how far they spread—is very hard. Even when the drift in goal happens prior to test set access, the results can paint an overly optimistic picture. Again pre-registering offers no guarantees of greater construct validity, but it’s a way of encouraging authors to remain aware of such drift.

The specific proposal

One challenge we run into in applying pre-registration to predictive modeling is that because we usually aren’t aiming for explanation, we’re willing to throw lots of features into our model, even if we’re not sure how they could meaningfully contribute, and we’re agnostic to what sort of model we use so long as its inductive bias seems to work for our scenario. Deciding the model class ahead of time as we do in pre-registering explanatory models can be needlessly restrictive. So, the protocol we propose has two parts.

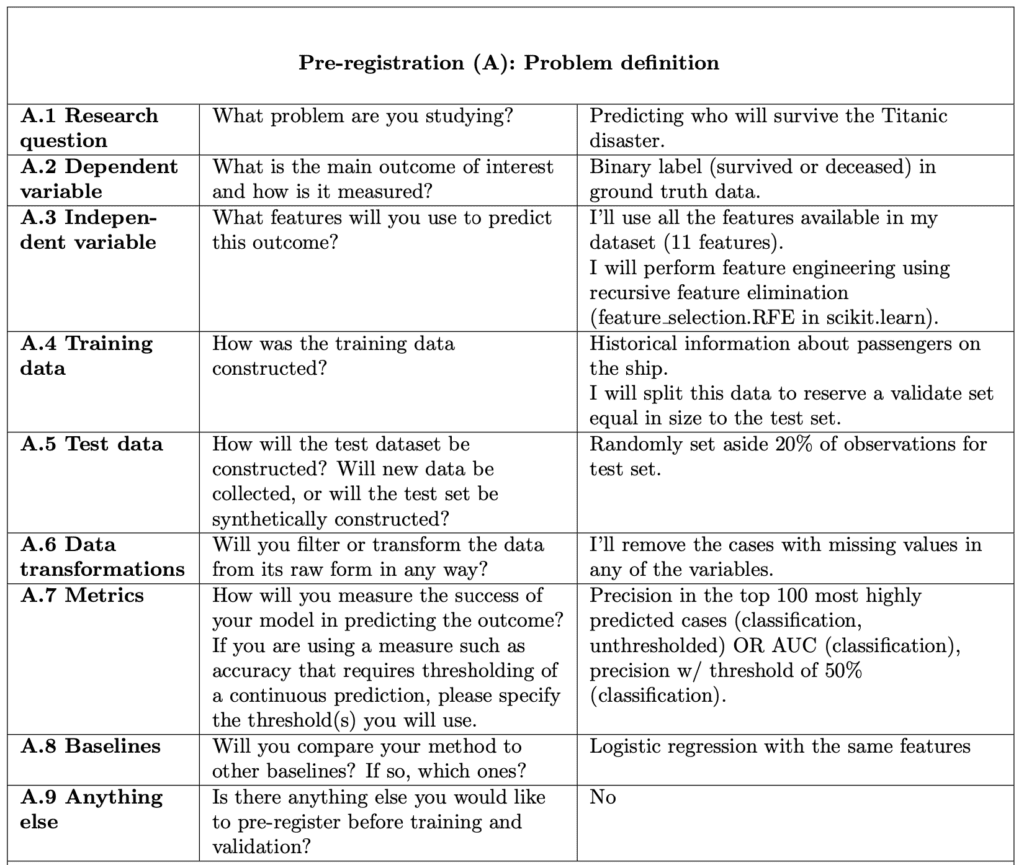

First, prior to training, one answers the following questions, which are designed to be addressable before looking at any correlations between features and outcomes

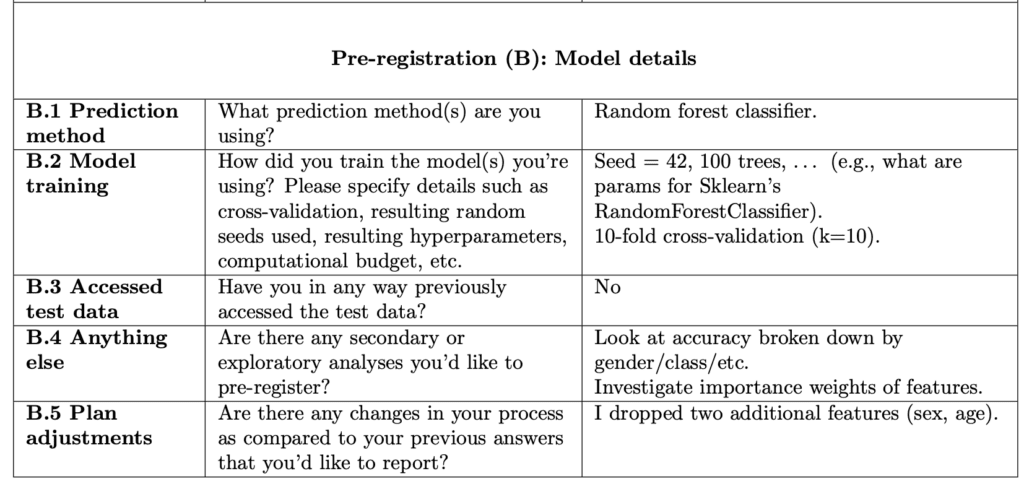

Then, after training and validation but before accessing test data, one answers the remaining questions:

Authors who want to try it can grab the forms by forking this dedicated github repo and include them in their own repository.

What we’ve learned so far

To get a sense of whether researchers could benefit from this protocol, we observed as six ML Ph.D. students applied it to a prediction problem we provided (predicting depression in teens using responses to the 2016 Monitoring the Future survey of 12th graders, subsampled from data used by Orben and Przybylski). This helped us see where they struggled to pre-specify decisions in phase 1, presumably because doing so was out of line with their usual process of figuring some things out as they conducted model training and validation. We had to remind several to be specific about metrics and data transformations in particular.

We also asked them in an exit interview what else they might have tried if their test performance had been lower than they expected. Half of the six participants described procedures that if not fully reported, seemed likely to compromise the validity of their test estimates (things like going back to re-tune hyperparameters then trying again on test data). This suggests that there’s an opportunity for pre-registration, if widely adopted, to play a role in reinforcing good workflow. This may be especially useful in fields where ML models are being applied by expertise in predictive modeling is still sparse.

The caveats

It was reassuring to directly observe examples where this protocol, if followed, might have prevented overfitting. However, the fact that we saw these issues despite having explained and motivated pre-registration during these sessions, and walked the participants through it, suggests that pre-specifying certain components of a learning pipeline alone is not necessarily enough to prevent overfitting.

It was also notable that while all of the participants but one saw value in pre-registering, their specific understandings of why and how it could work varied. There was as much variety in their understandings of pre-registration as there was in ways they approached the same learning problem. Pre-registration is not going to be the same thing to everyone nor even used the same way, because the ways it helps are multi-faceted. As a result, it’s dangerous to interpret the mere act of pre-registration as a stamp of good science.

I have some major misgivings about putting too much faith into the idea that publicly pre-registering guarantees that estimates are valid, and hope that this protocol gets used responsibly, as something authors choose to do because they feel it helps them prevent unintentional overfitting rather than the simple solution that guarantees to the world that your estimates are gold. It was nice to observe that a couple of study participants seemed particularly drawn to the idea of pre-registering based on perceived “intrinsic” value, remarking about the value they saw in it as a personally-imposed set of constraints to incorporate in their typical workflow.

It won’t work for all research projects. One participant figured out while talking aloud that prior work he’d done identifying certain behaviors in transformer models would have been hard to pre-register because it was exploratory in nature.

Another participant fixated on how the protocol was still vulnerable: people could lie about not having already experimented with training and validation, there’s no guarantee that the train/test split authors describe is what they actually used to produce their estimates, etc. Computer scientists tend to be good at imagining loopholes that adversarial attacks could exploit, so maybe they will be less likely to oversell pre-registration as guaranteeing validity. At the end of the day, it’s still an honor system.

As we’ve written before, part of the issue with many claims in ML-based research is that often performance estimates for some new approach represent something closer to best case performance due to overlooked degrees of freedom, but they can get interpreted as expected performance. Pre-registration is an attempt at ensuring that the estimates that get reported are more likely to be represent what they’re meant to be. Maybe it’s better though to try to change readers’ perceptions that they can be taken at face value to begin with, though. I’m not sure.

We’re open to feedback on the specific protocol we provide and curious to hear how it works out for those who try it.

P.S. Against my better judgment, I decided to go to NeurIPS this year. If you want to chat pre-registration or threats to the validity of ML performance estimates find me there Wed through Sat.