Estimating Reliability – Psychometrics

R-bloggers 2013-04-03

(This article was first published on Econometrics by Simulation, and kindly contributed to R-bloggers)

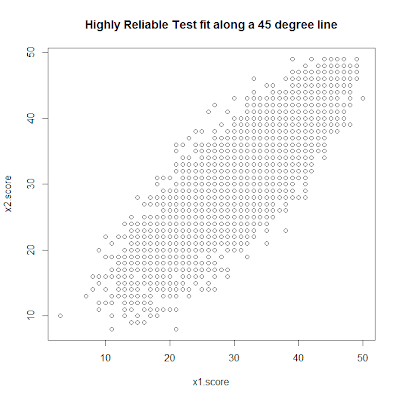

# Each item has a item characteristic curve (ICC) of: PL3 = function(theta,a, b, c) c+(1-c)*exp(a*(theta-b))/(1+exp(a*(theta-b))) # Let's imagine we have a test with parameters a,b,c nitems = 50 test.a = rnorm(nitems)*.2+1 test.b = rnorm(nitems) test.c = runif(nitems)*.3 # I classical test theory we have the assumption that total variance of the test results is equal to the variance of the true scores (defined as the expected outcome of the test for a given subject) plus measurement error [Var(X) = Var(T(theta)) + Var(E)]. # We "know" that classical test theory is wrong because we know that the error must be a function of ability (theta) since we know that no matter how good (or bad) a student is, the student will never get above the maximum (or below the minimum) score of the test. # Let's generate the student pool. nstud = 5000 stud.theta = rnorm(nstud) # First let's generate our expected outcomes by student. prob.correct = matrix(NA, ncol=nitems, nrow=nstud) for (i in 1:nitems) prob.correct[,i]=PL3(stud.theta, test.a[i], test.b[i], test.c[i]) # Generate true scores true.score = apply(prob.correct, 1, sum) # Generate a single sample item responses for test 1. x1.responses = matrix(runif(nitems*nstud), ncol=nitems, nrow=nstud) # Generate total scores x1.score = apply(x1.responses, 1, sum) # The test sampling seems to be working correctly cor(true.score, x1.score) # Let's sample a parrellel test x2.responses = matrix(runif(nitems*nstud), ncol=nitems, nrow=nstud) # Generate total scores x2.score = apply(x2.responses, 1, sum) # Classical reliability is defined as the correlation between two parrellel test scores (I think): rel.XX = cor(x1.score, x2.score) # Looks like our estimated reliability is about .84 or .85 plot(x1.score, x2.score, main="Highly Reliable Test fit along a 45 degree line") # In theory we can find the reliability by performing the following calculation: # rho = var(T)/var(x1) rel.TX = var(true.score)/var(x1.score) # This seems to give an estiamte that varies between .79 and .88 # Alternatively according to theory rho = 1 - var(E)/var(X) E = true.score-x1.score rel.EX =1-var(E)/var(x1.score) # Interestingly, this seems to produce a different estimate which is between .82 and .85 typically. # An alternative reliability estimate is the Kuder-Richardson Formula 20 # K-R20= k/k-1 * (1-sum(p*q)/var(x1)) # With k defined as number of items # and p probability correct with q = 1-p # First let's calculate the estimated p by item. P = apply(x1.responses, 2, mean) Q = 1-P # Now for the K-R20 rel.KR20 = nitems/(nitems-1)*(1-sum(P*Q)/var(x1.score)) # This estimate seems to be between .84 and .86 # In order to calculate the IRT approximations of the classical test theory reliability we first need to calculate information. # I = p*q when d=1 and p and q are the true probabilities of getting the correct answer. # Which is equivalent to the prob correct on each item I = prob.correct*(1-prob.correct) # Test information can be found as the sum of the information x1.I = apply(I, 1, sum) # The unconditional standard error estimate. SE = 1/x1.I^.5 # Average (unconditional) standard error uSE = mean(SE) # An IRT reliability estimate Thissen (2000) could be formed as: rel.IRT = 1-uSE^2 # This estimate is around .89. However, I have not estimated the student abilities which might lead to a different level of reliability if I needed to. rel.XX rel.EX rel.TX rel.KR20 rel.IRT

# Each item has a item characteristic curve (ICC) of: PL3 = function(theta,a, b, c) c+(1-c)*exp(a*(theta-b))/(1+exp(a*(theta-b))) # Let's imagine we have a test with parameters a,b,c nitems = 50 test.a = rnorm(nitems)*.2+1 test.b = rnorm(nitems) test.c = runif(nitems)*.3 # I classical test theory we have the assumption that total variance of the test results is equal to the variance of the true scores (defined as the expected outcome of the test for a given subject) plus measurement error [Var(X) = Var(T(theta)) + Var(E)]. # We "know" that classical test theory is wrong because we know that the error must be a function of ability (theta) since we know that no matter how good (or bad) a student is, the student will never get above the maximum (or below the minimum) score of the test. # Let's generate the student pool. nstud = 5000 stud.theta = rnorm(nstud) # First let's generate our expected outcomes by student. prob.correct = matrix(NA, ncol=nitems, nrow=nstud) for (i in 1:nitems) prob.correct[,i]=PL3(stud.theta, test.a[i], test.b[i], test.c[i]) # Generate true scores true.score = apply(prob.correct, 1, sum) # Generate a single sample item responses for test 1. x1.responses = matrix(runif(nitems*nstud), ncol=nitems, nrow=nstud) # Generate total scores x1.score = apply(x1.responses, 1, sum) # The test sampling seems to be working correctly cor(true.score, x1.score) # Let's sample a parrellel test x2.responses = matrix(runif(nitems*nstud), ncol=nitems, nrow=nstud) # Generate total scores x2.score = apply(x2.responses, 1, sum) # Classical reliability is defined as the correlation between two parrellel test scores (I think): rel.XX = cor(x1.score, x2.score) # Looks like our estimated reliability is about .84 or .85 plot(x1.score, x2.score, main="Highly Reliable Test fit along a 45 degree line") # In theory we can find the reliability by performing the following calculation: # rho = var(T)/var(x1) rel.TX = var(true.score)/var(x1.score) # This seems to give an estiamte that varies between .79 and .88 # Alternatively according to theory rho = 1 - var(E)/var(X) E = true.score-x1.score rel.EX =1-var(E)/var(x1.score) # Interestingly, this seems to produce a different estimate which is between .82 and .85 typically. # An alternative reliability estimate is the Kuder-Richardson Formula 20 # K-R20= k/k-1 * (1-sum(p*q)/var(x1)) # With k defined as number of items # and p probability correct with q = 1-p # First let's calculate the estimated p by item. P = apply(x1.responses, 2, mean) Q = 1-P # Now for the K-R20 rel.KR20 = nitems/(nitems-1)*(1-sum(P*Q)/var(x1.score)) # This estimate seems to be between .84 and .86 # In order to calculate the IRT approximations of the classical test theory reliability we first need to calculate information. # I = p*q when d=1 and p and q are the true probabilities of getting the correct answer. # Which is equivalent to the prob correct on each item I = prob.correct*(1-prob.correct) # Test information can be found as the sum of the information x1.I = apply(I, 1, sum) # The unconditional standard error estimate. SE = 1/x1.I^.5 # Average (unconditional) standard error uSE = mean(SE) # An IRT reliability estimate Thissen (2000) could be formed as: rel.IRT = 1-uSE^2 # This estimate is around .89. However, I have not estimated the student abilities which might lead to a different level of reliability if I needed to. rel.XX rel.EX rel.TX rel.KR20 rel.IRT To leave a comment for the author, please follow the link and comment on his blog: Econometrics by Simulation.

R-bloggers.com offers daily e-mail updates about R news and tutorials on topics such as: visualization (ggplot2, Boxplots, maps, animation), programming (RStudio, Sweave, LaTeX, SQL, Eclipse, git, hadoop, Web Scraping) statistics (regression, PCA, time series,ecdf, trading) and more...