Mapping every IPv4 address

R-bloggers 2014-09-15

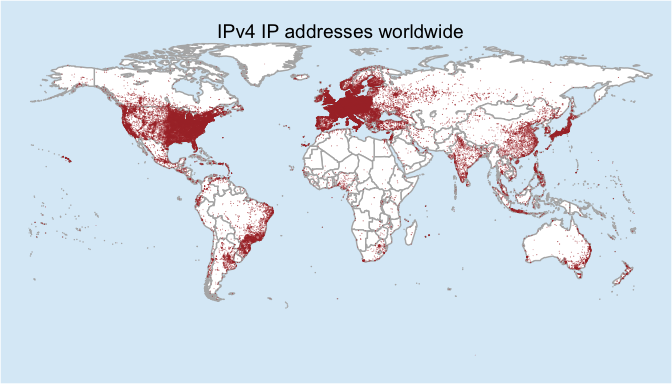

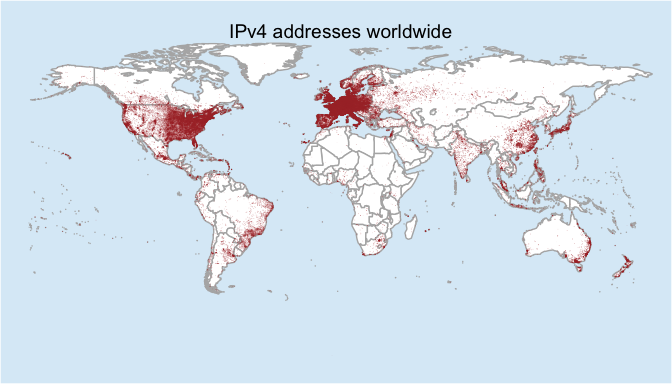

During July I was working with a commercial data source that providesextra data around IP addresses and it dawned on me: rather than pingingbillions of IP addresses and creatingmap,I could create a map from all the geolocation data I had at my fingertips. At a high level I could answer “Where are all the IPv4 addressesworldwide?” But in reality what I created was a map communicating “Wheredoes the geo-location services think all the IPv4 address areworldwide?” And at the end of July I put together a plot in about anhour and tossed it onto twitter. It is still getting retweets over amonth later in spite of the redundancy in the title.

Bob and I have talked quite a bit before about the (questionable) valueof maps and how they can be eye-catching, but they often lack thesubstance to communicate a clear message. The problem may be compoundedwhen IP geolocation is the data source for maps. Hopefully I can pointout some of the issues in this post as we walk through how to gather andmap every IPv4 address in the world.

Step 2: Get the data

I already did step 1 by defining our goal and as a question it is,“Where does the geo-location service think all the ipv4 addresses areworldwide?” Step 2 then is getting data to support our research. When Icreated the original map I used data from a commercial geolocationservice. Since most readers won’t have a subscription, we can referenceMaxmind and their free geolocationdata. Start bydownloading the “GeoLite City” database inCSV/zipformat (28meg download) and unzip it to get the“GeoLiteCity-Location.csv” file. Since the first line of the CSV is acopyright statement, you have to read it in and skip 1 line. Becausethis is quite a bit file, you should leverage the data.table commandfread()

library(data.table)geo <- fread("data/GeoLiteCity-Location.csv", header=T, skip=1)# how many rows?geoRows <- nrow(geo)print(geoRows)## [1] 557986

Right away here, you can see some challenges with IP geolocation. Thereare around 4.2 billion total IP address, 3.7 billion are routable (halfa billion are reserved) and yet the data only has a total of 557,986unique rows. It’s probably a safe bet to say some of these may beaggregated together.

You can jump right to a map here and plot the latitude/longitude in thatfile, but to save processing time, you can remove duplicate points withthe unique function. Then load up a world map, and plot the points on it.

geomap1 <- unique(geo, by=c("latitude", "longitude"))library(maps)library(ggplot2)# load the worldworld_map<-map_data("world")# strip off antartica for aestheticsworld_map <- subset(world_map, region != "Antarctica") # sorry penguins# set up the plot with the map datagg <- ggplot(world_map)# now add a map layergg <- gg + geom_map(dat=world_map, map = world_map, aes(map_id=region), fill="white", color="gray70")# and the ip geolocation pointsgg <- gg + geom_point(data=geomap1, aes(longitude, latitude), colour="#AA3333", alpha=1/10, size=0.5)# basic themegg <- gg + theme_bw()# show the mapprint(gg)

That’s interesting, and if you notice the alpha on the points is set to1/10th, meaning it will take ten point on top of one another to make thecolor solid (red in this case). One thing we didn’t do though is accountfor the density of the IP addresses. Some of those points may havethousands while others may have just a handful and the map doesn’t showthat. In order to account for that you have to load up the other file inthe zip file, the GeoLiteCity-Blocks file and merge it with the firstfile loaded.

blocks <- fread("data/GeoLiteCity-Blocks.csv", header=T, skip=1)# these columns are read is as character for some reason# make them numericblocks <- blocks[, lapply(.SD, as.numeric)]fullgeo <- merge(blocks, geo, all=TRUE, by="locId") # "all" is important here# trim out the columns we needfullgeo <- fullgeo[ ,c(2,3,8,9), with=FALSE]# set column names that are easier to typesetnames(fullgeo, c("begin", "end", "latitude", "longitude"))# look at the dataprint(fullgeo)## begin end latitude longitude## 1: NA NA 0.00 0.000## 2: 2.655e+08 2.655e+08 35.00 105.000## 3: 2.655e+08 2.655e+08 35.00 105.000## 4: 5.408e+08 5.408e+08 35.00 105.000## 5: 5.870e+08 5.870e+08 35.00 105.000## --- ## 2428090: 3.646e+09 3.646e+09 52.52 13.400## 2428091: 3.647e+09 3.647e+09 52.88 9.683## 2428092: 3.735e+09 3.735e+09 -39.93 175.050## 2428093: 3.735e+09 3.735e+09 -41.30 174.783## 2428094: 3.730e+09 3.730e+09 24.67 118.458

What you are looking at here is four columns, the begining and endingaddress in an IP block with the latitude and longitude of that block.The IP addresses are stored in long format, which is both easier to workwith and smaller for memory/storage of the data. We’ll make use of thelong format in a bit, for now we still have more clean up to do. Noticethe first line where begin and end are both NA? That either meansthere were empty values in the CSV or the merge command didn’t have amatching record for that location ID and because you set all to truein the merge command above, it filled in the row with NA’s. The defaultbehavior is to drop any rows that aren’t in both tables, but we overrodethat by setting all=TRUE. We could take care of these NA’s butremoving the all from the merge command and accept the default ofFALSE for all. But this is interesting, because in our first plot wejust took all the latitude and longitude and plotted them… how manydon’t have corresponding IP address blocks?

sum(is.na(fullgeo$begin))## [1] 430051

430 thousand orphaned locations? That seems like a lot of unassociatedlat/long pairs, doesn’t it?

But keep going, you’ll want to do two more things with this data: 1)count the number of IP’s in each block and 2) total up the number ofIP’s for each location. In order to do that efficiently from both a codeand time perspective we’ll leverage dplyr. Let’s clean up the NA’swhile we are at it.

library(dplyr)# tbl_dt loads a data.table object into dplyr# and the %>% is the "pipe" command to send the output# to the next command.finalgeo <- tbl_dt(fullgeo) %>% filter(!is.na(begin)) %>% # remove the NA's. mutate(count = end - begin + 1) %>% # count the # of IPs group_by(latitude, longitude) %>% # aggregate by unique lat and long summarise(ipcount=sum(count)) # add up all counts# what do we have?print(finalgeo)## Source: local data table [105,304 x 3]## Groups: latitude## ## latitude longitude ipcount## 1 -90.00 0.00 4419## 2 -54.80 -68.30 6560## 3 -54.16 -36.72 5## 4 -53.87 -67.78 1280## 5 -53.79 -67.71 8960## 6 -53.67 -68.47 8## 7 -53.33 -68.95 24## 8 -53.15 -70.92 6152## 9 -51.75 -59.00 1154## 10 -51.73 -72.52 256## .. ... ... ...

Notice how we have 105,304 rows? That’s a far cry from the 557,986 rowswe had in the original latitude/longitude pairings you mapped.

Explore the data

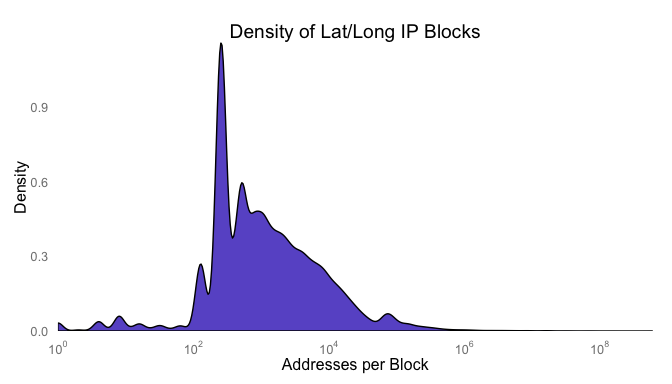

What does the distribution of the counts look like? Chances are goodthere is a heavy skew to the data. To create a plot where you can seethe distribution, you’ll have to change the axis showing thedistribution of addresses per lat/long pair to a logorithmic scale.

library(scales)gg <- ggplot(finalgeo, aes(x=ipcount))gg <- gg + geom_density(fill="slateblue")gg <- gg + scale_x_log10("Addresses per Block", expand=c(0,0), breaks = trans_breaks("log10", function(x) 10^x), labels = trans_format("log10", math_format(10^.x)))gg <- gg + scale_y_continuous("Density", expand=c(0,0))gg <- gg + ggtitle("Density of Lat/Long IP Blocks")gg <- gg + theme(axis.ticks=element_blank(), panel.grid=element_blank(), panel.background=element_rect(color=NA, fill=NA))print(gg)

I would guess that the spikes are around and we can check by convertingthe count field to a factor and running summary against it.

summary(factor(finalgeo$ipcount), maxsum=10)## 256 512 128 768 1024 384 1280 640 1536 ## 21905 8744 5142 4583 3543 3019 2154 1936 1545 ## (Other) ## 52733

While that’s interesting, it’s not surprising or all that informative.

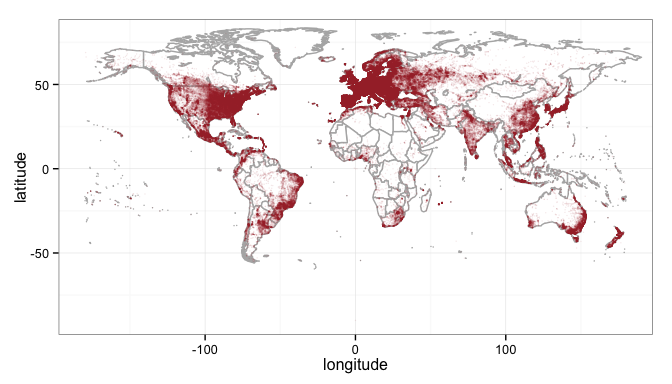

Back to the map, right now you have three variables, the latitude,longitude and count of addreses at the location. Lat and long are easyenough, those are points on a map. How do represent density at thatpoint? I think there are three viable options: color (hue), size oropacity (color brightness). In my original plot, I leverage the alphasetting on the points. Trying to use hue would just get jumbled togethersince at the world view many of the points overlap and individual colorswould be impossible to see. with a hundred thousand+ points, size alsowill overlap and be indistinguishable.

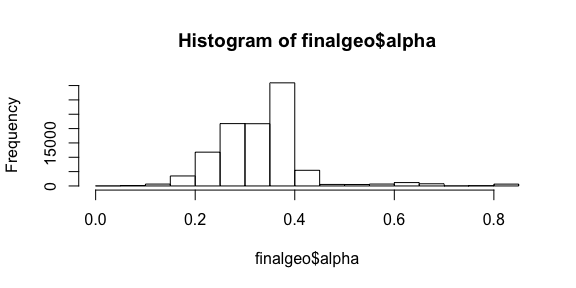

Since all we want to see is the relative density over the entire map,the reader won’t care if there is a lot of IP addresses at a point or awhole lot of IP addresses at point. Showing density is what’s important,so let’s use the alpha (opacity) setting of the point to show density.The alpha setting is a value between 0 and 1, and our counts are largenumbers with a heavy skew. To wrangle the range into an alpha setting weshould first take the log of the count and then scale it between 0 and1. I chose to apply log twice to shift the skew the distribution so mostof the values are less than 0.5. Since the points overlap, this shouldmake a nice range of opacity for the points.

temp <- log(log(finalgeo$ipcount+1)+1)finalgeo$alpha <- (max(temp)-temp)/max(temp)hist(finalgeo$alpha)

And now let’s map those!

world_map<-map_data("world")world_map <- subset(world_map, region != "Antarctica") # inteRcouRse AntaRcticagg <- ggplot(world_map)gg <- gg + geom_map(dat=world_map, map = world_map, aes(map_id=region), fill="white", color="gray70")gg <- gg + geom_point(data=finalgeo, aes(longitude, latitude), colour="#AA3333", alpha=finalgeo$alpha, size=0.3)gg <- gg + scale_x_continuous(expand=c(0,0))gg <- gg + scale_y_continuous(expand=c(0,0))gg <- gg + expand_limits(x = world_map$long, y = world_map$lat)gg <- gg + ggtitle("IPv4 addresses worldwide")gg <- gg + theme(axis.text=element_blank(), axis.title=element_blank(), legend.position="none", axis.ticks=element_blank(), panel.grid=element_blank(), panel.background=element_rect(color=NA, fill=NA), plot.background=element_rect(fill="#A0CFEC66"))gg

And there you have it! There are several tweaks that could be done tothis. If you notice in this final map, I set the point size to be 0.3.If you raise that up you can create a map that is very dense with colorand the size of the point is relative to the size of the output plot. Ifyou export at 4x6 a point size of 0.3 may be huge, but they may barelyshow up if you export at 15x20. There is no set formula and you can playaround with the values, but just be sure the final product stays asclose to the data as possible.

A Final Thought

We talked about the “Potwin effect” in our book and Bob mentioned it inhisStatebinsblog post as well. But if you notice some of the lat/long pairs arerounded off to whole integers. That may be a good indication that theonly thing known about the geolocation of the IP address is the country.Further work may be to remove or otherwise account for the uncertainpoints by matching. Chances are good they are the only lat/long pairsthat are both whole numbers throughout the data.

R-bloggers.com offers daily e-mail updates about R news and tutorials on topics such as: visualization (ggplot2, Boxplots, maps, animation), programming (RStudio, Sweave, LaTeX, SQL, Eclipse, git, hadoop, Web Scraping) statistics (regression, PCA, time series, trading) and more...