AI Learning Design Workshop: The Trickiness of AI Bootcamps and the Digital Divide

e-Literate 2023-10-02

As readers of this series know, I’ve developed a six-session design/build workshop series for learning design teams to create an AI Learning Design Assistant (ALDA). In my last post in this series, I provided an elaborate ChatGPT prompt that can be used as a rapid prototype that everyone can try out and experiment with.[1] In this post, I’d like to focus on how to address the challenges of AI literacy effectively and equitably.

We’re in a tricky moment with generative AI. In some ways, it’s as if writing has just been invented, but printing presses are already everywhere. The problem of mass distribution has already been solved. But nobody’s invented the novel yet. Or the user manual. Or the newspaper. Or the financial ledger. We don’t know what this thing is good for yet, either as producers or as consumers. We don’t know how, for example, the invention of the newspaper will affect the ways in which we understand and navigate the world.

And, as with all technologies, there will be haves and have-nots. We tend to talk about economic and digital divides in terms of our students. But the divide among educational institutions (and workplaces) can be equally stark and has a cascading effect. We can’t teach literacy unless we are literate.

This post examines the literacy challenge in light of a study published by Harvard Business School and reported on by Boston Consulting Group (BCG). BCG’s report and the original paper are both worth reading because they emphasize different findings. But the crux is the same:

- Using AI does enhance the productivity of knowledge workers.

- Weaker knowledge workers improve more than stronger ones.

- AI is helpful for some kinds of tasks but can actually harm productivity for others.

- Training workers in AI can hurt rather than help their performance if they learn the wrong lessons from it.

The ALDA workshop series is intended to be a kind of AI literacy boot camp. Yes, it aspires to deliver an application that improves a serious institutional process by the end. But the real, important, lasting goal is literacy in techniques that can improve worker performance while avoiding the pitfalls identified in the study.

In other words, the ALDA BootCamp is a case study and an experiment in literacy. Unfortunately, the way in which it needs to be funded also has implications for the digital divide. While I believe it will show ways to scale AI literacy effectively, it does so at the cost of widening that divide. I will address that concern as well.

The study

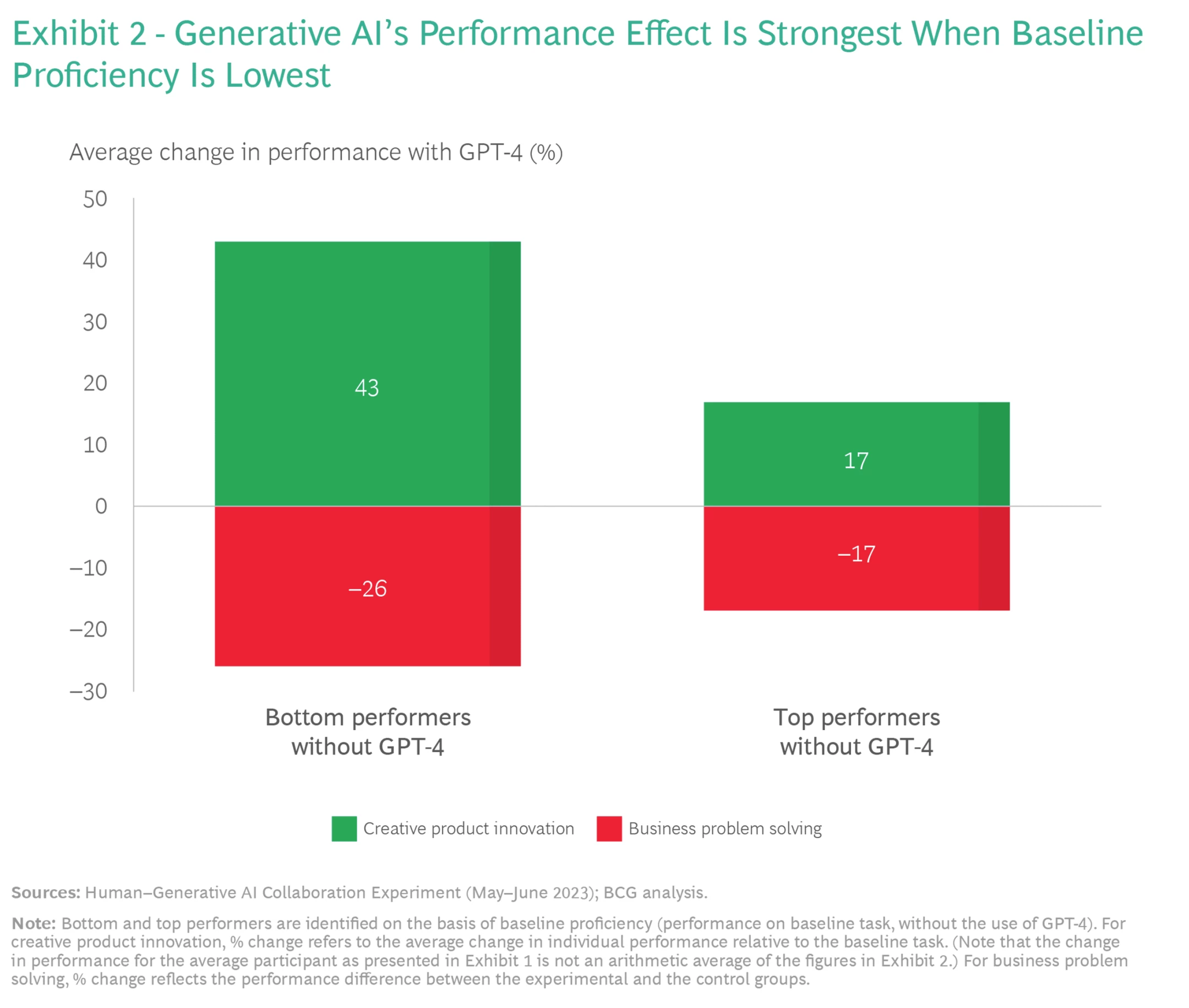

The headline of the BCG report is that AI usage increased the performance of consultants, especially less effective consultants, on “creative tasks” while decreasing their performance on “business tasks.” The paper, in contrast, refers to “frontier” tasks, meaning tasks that generative AI currently does well, and “outside the frontier” tasks, meaning the opposite. While the paper provides the examples used, it never clearly defines the characteristics of what makes a task “outside the frontier.” (More on that in a bit.) At any rate, both the report and the paper show gains for all knowledge workers on a variety of tasks, with particularly impressive gains among knowledge workers in the lower half of the range of work performance:

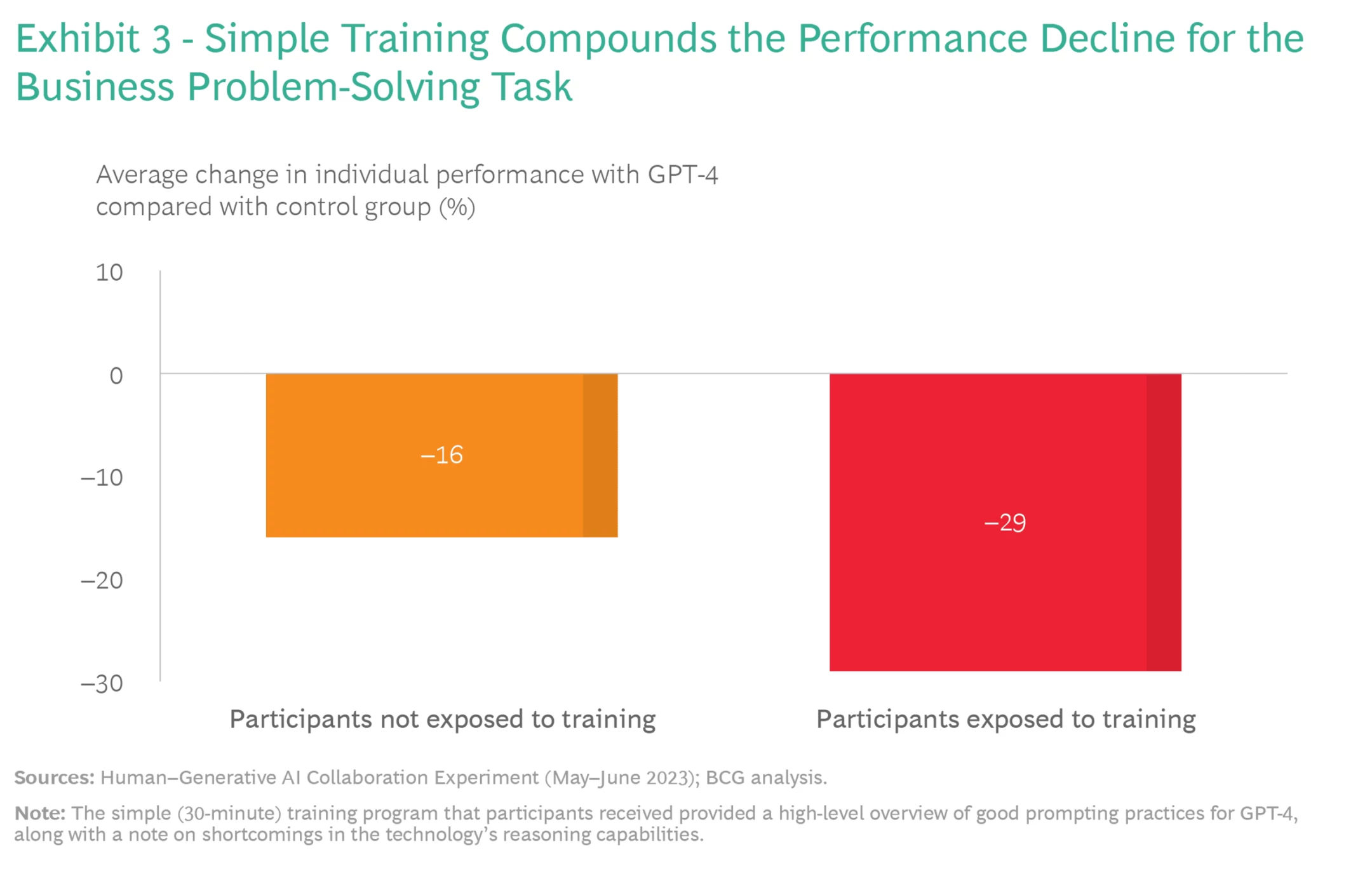

As I said, we’ll get to the red part in a bit. Let’s focus on the performance gains and, in particular, the ability of ChatGPT to equalize performance among workers.

Looking at these graphs reminds me of the benefits we’ve seen from adaptive learning in the domains where it works. Adaptive learning can help many students, but it is particularly useful in helping students who get stuck. Once they are helped, they tend to catch up to their peers in performance. This isn’t quite the same since the support is ongoing. It’s more akin to spreadsheet formulas for people who are good at analyzing patterns in numbers (like a pro forma, for example) but aren’t great at writing those formulas.

The bad news

For some tasks, AI made the workers worse. The paper refers to these areas as outside “the jagged frontier.” Why “jagged”? While the authors aren’t explicit, I’d say that (1) AI capabilities are not obviously or evenly bounded, (2) the boundary moves as the technology evolves, and (3) it can be hard to tell even in the moment which side of the boundary you’re on. On this last point, the BCG report highlights that some training made workers perform worse. Its authors speculate that overconfidence might be the cause.

What are those tasks in the red zone of the study? The Harvard paper gives us a clue that has implications for how we approach teaching AI literacy. They write:

In our study, since AI proved surprisingly capable, it was difficult to design a task in this experiment outside the AI’s frontier where humans with high human capital doing their job would consistently outperform AI. However, navigating AI’s jagged capabilities frontier remains challenging. Even for experienced professionals engaged in tasks akin to some of their daily responsibilities, this demarcation is not always evident. As the boundaries of AI capabilities continue to expand, often exponentially, it becomes incumbent upon human professionals to recalibrate their understanding of the frontier and for organizations to prepare for a new world of work combining humans and AI.

Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality

The experimental conditions that the authors created suggest to me that challenges can arise from critical context or experience that is not obviously missing. Put another way, the AI may perform poorly on synthetic thinking tasks that are partly based on experience rather than just knowledge. But that’s both a guess and somewhat beside the point. The real issue is that AI makes knowledge workers better except when it makes them worse, and it’s hard to know what it will do in a given situation.

The BCG report includes a critical detail that I believe is likely related to the problem of the invisible jagged frontier:

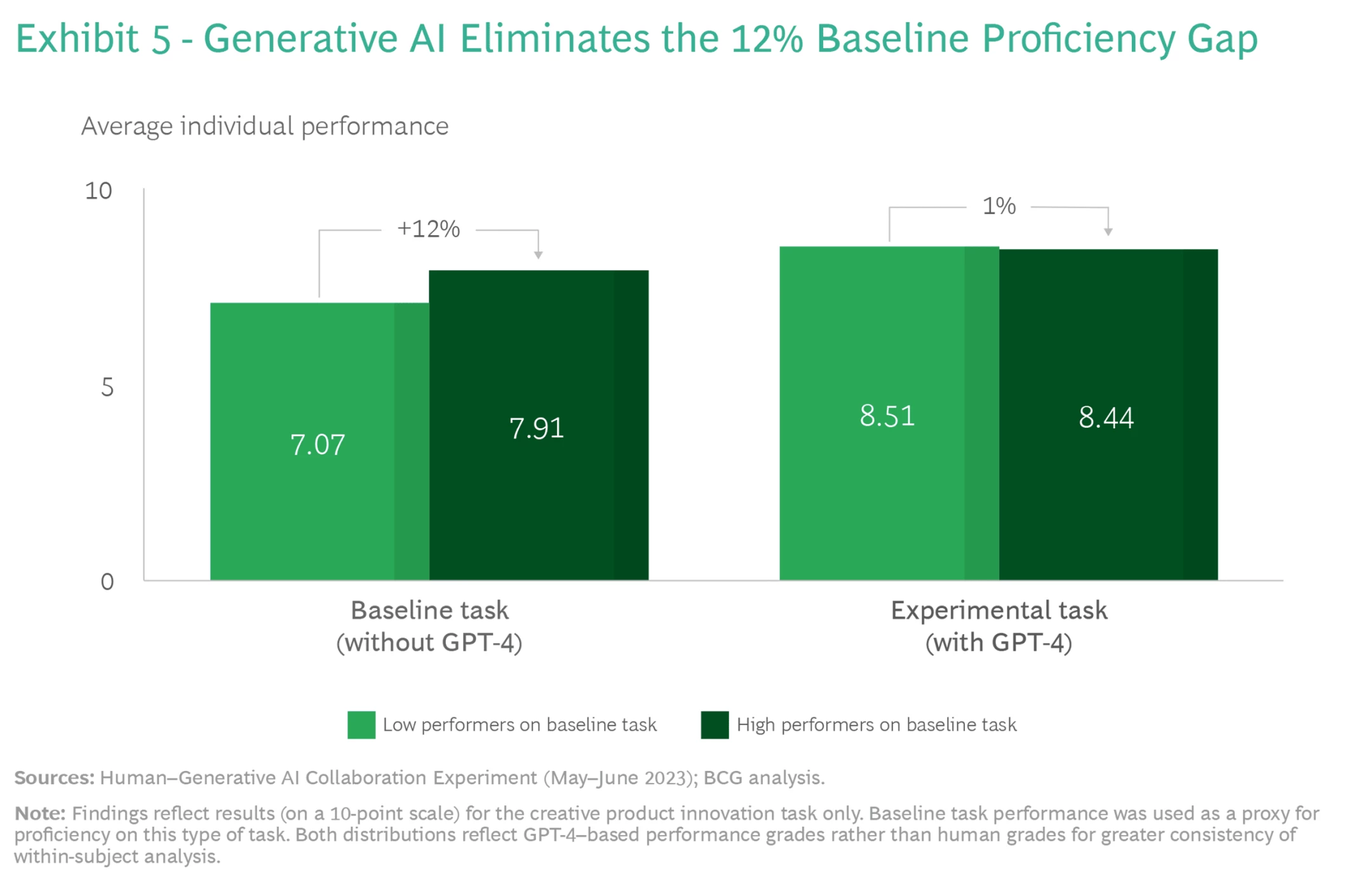

The strong connection between performance and the context in which generative AI is used raises an important question about training: Can the risk of value destruction be mitigated by helping people understand how well-suited the technology is for a given task? It would be rational to assume that if participants knew the limitations of GPT-4, they would know not to use it, or would use it differently, in those situations.

Our findings suggest that it may not be that simple. The negative effects of GPT-4 on the business problem-solving task did not disappear when subjects were given an overview of how to prompt GPT-4 and of the technology’s limitations….

Even more puzzling, they did considerably worse on average than those who were not offered this simple training before using GPT-4 for the same task. (See Exhibit 3.) This result does not imply that all training is ineffective. But it has led us to consider whether this effect was the result of participants’ overconfidence in their own abilities to use GPT-4—precisely because they’d been trained.

How People Create—And Destroy—Value With Generative AI

BCG speculates this may be due to overconfidence, which is a reasonable guess. If even the experts don’t know when the AI will perform poorly, then the average knowledge worker is likely to be worse than the experts at predicting it. If the training didn’t improve their intuitions about when to be careful, then it could easily exacerbate a sense of overconfidence.

Let’s be clear about what this means: The AI prompt engineering workshops you’re conducting may actually be causing your people to perform worse rather than better. Sometimes. But you’re not sure when or how often.

While I don’t have a confident answer to this problem, the ALDA project will pilot a relatively novel approach to it.

Two-sided prompting and rapid prototype projects

The ALDA project employs two approaches that I believe may help with the frontier invisibility problem and its effects. One is in the process, while the other is in the product.

The process is simple: Pick a problem that’s a bit more challenging than a solo prompt engineer could take on or that you want to standardize across your organization. Deliberately pick a problem that’s on the jagged edge where you’re not sure where the problems will be. Run through a series of rapid prototype cycles using cheap and easy-to-implement methods like prompt engineering supported by Retrieval Augmented Generation. Have groups of practitioners test the application on a real-world problem with each iteration. Develop a lightweight assessment tool like a rubric. Your goal isn’t to build a perfect app or conduct a journal-worthy study. Instead, you want to build a minimum viable product while sharpening and updating the instincts of the participants regarding where the jagged line is at the moment. This practice could become habitual and pervasive in moderately resource-rich organizations.
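To make the "lightweight assessment tool like a rubric" a little more concrete, here is a minimal Python sketch of how a team might record rubric ratings for each prototype iteration and flag the criteria where the application scores poorly. The criterion names, the 1 to 4 scale, and the 2.5 threshold are all hypothetical choices for illustration, not part of the ALDA project.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List

# Hypothetical rubric criteria; a real team would choose its own.
CRITERIA = ("factual_accuracy", "pedagogical_fit", "context_awareness")


@dataclass
class Rating:
    iteration: int          # which prototype cycle was tested
    rater: str              # practitioner who did the testing
    scores: Dict[str, int]  # criterion -> score on an assumed 1..4 scale


def summarize(ratings: List[Rating]) -> Dict[int, Dict[str, float]]:
    """Return the mean score per criterion for each prototype iteration."""
    by_iteration: Dict[int, List[Rating]] = {}
    for rating in ratings:
        by_iteration.setdefault(rating.iteration, []).append(rating)
    return {
        iteration: {c: mean(r.scores[c] for r in group) for c in CRITERIA}
        for iteration, group in sorted(by_iteration.items())
    }


def weak_spots(summary: Dict[int, Dict[str, float]],
               threshold: float = 2.5) -> Dict[int, List[str]]:
    """List criteria whose mean is below the threshold in each iteration."""
    return {
        iteration: [c for c, score in scores.items() if score < threshold]
        for iteration, scores in summary.items()
    }
```

Criteria that keep showing up in `weak_spots` across iterations are candidates for where the jagged line currently sits for that particular problem. The point is the shared conversation the numbers provoke among practitioners, not statistical rigor.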

On the product side, the ALDA prototype I released in my last post demonstrates what I call “two-sided prompting.” By enabling the generative AI to take the lead on the conversation at times, asking questions rather than giving answers, I effectively created a fluid UX in which the application guides the knowledge worker toward the areas where she can make her most valuable contributions without unduly limiting the creative flow. The user can always start a digression or answer a question with a question. A conversation between experts with complementary skills often takes the form of a series of turn-taking prompts between the two, each one offering analysis or knowledge and asking for a reciprocal contribution. This pattern should invoke all the lifelong skills we develop when having conversations with human experts who can surprise us with their knowledge, their limitations, their self-awareness, and their lack thereof.
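As a rough illustration of the turn-taking pattern described above, the Python sketch below shows one way the conversation state for two-sided prompting could be assembled. It is not the ALDA prompt from the earlier post. The system prompt wording, the function names, and the role/content message shape (borrowed from the convention common to chat-style APIs) are all assumptions, and no model is actually called.

```python
from typing import Dict, List

Message = Dict[str, str]

# Hypothetical instruction asking the model to lead with questions at times.
SYSTEM_PROMPT = (
    "You are a learning design assistant. Do not jump straight to answers. "
    "Offer one piece of analysis at a time, then ask the user one question "
    "about context only they would know. Let the user digress or answer a "
    "question with a question."
)


def build_messages(history: List[Message], user_input: str) -> List[Message]:
    """Assemble the message list to send to a chat-style model."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        *history,
        {"role": "user", "content": user_input},
    ]


def hands_turn_back(reply: str) -> bool:
    """Rough check that a model reply ends by asking the user something."""
    return reply.rstrip().endswith("?")
```

A prototype team might log how often replies pass `hands_turn_back` as a crude signal that the application is still inviting the knowledge worker's contribution rather than simply delivering answers.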

I’d like to see the BCG study compared to the literature on how often we listen to expert colleagues or consultants—our doctors, for example—how effective we are at knowing when to trust our own judgment, and how people who are good at it learn their skills. At the very least, we’d have a mental model that is old, widely used, and offers a more skeptical counterbalance to our idea of the all-knowing machine. (I’m conducting an informal literature review on this topic and may write something about it if I find anything provocative.)

At any rate, the process and UX features of AI “BootCamps” (or, more accurately, AI hackathon-as-a-practice) are not ones I’ve seen in other generative AI training course designs so far.

The equity problem

I mentioned that relatively resource-rich organizations could run these exercises regularly. They need to be able to clear time for the knowledge workers, provide light developer support, and have the expertise necessary to design these workshops.

Many organizations struggle with the first requirement and lack the second one. Very few have the third one yet because designing such workshops requires a combination of skills that is not yet common.

The ALDA project is meant to be a model. When I’ve conducted public good projects like these in the past, I’ve raised vendor sponsorship and made participation free for the organizations. But this is an odd economic time. The sponsors who have paid $25,000 or more into such projects in the past have usually been either publicly traded or PE-owned. Most such companies in the EdTech sector have had to tighten their belts. So I’ve been forced to fund the ALDA project as a workshop paid for by the participants at a price that is out of reach of many community colleges and other access-oriented institutions, where this literacy training could be particularly impactful. I’ve been approached by a number of smart, talented, dedicated learning designers at such institutions that have real needs and real skills to contribute but no money.

So I’m calling out to EdTech vendors and other funders: Sponsor an organization. A community college. A non-profit. A local business. We need their perspective in the ALDA project if we’re going to learn how to tackle the thorny AI literacy problem. If you want, pick a customer you already work with. That’s fine. You can ride along with them and help.

Contact me at michael@eliterate.us if you want to contribute and participate.

[1] Please see Josh Barron’s link at the end of the post; WordPress’s automatic formatting mangled some of the syntax from the prompt. Josh helpfully posted a link to a Google Doc that fixes the problems.
