Quantum Computing, Finally!! (or maybe not)

The Physics of Finance 2013-06-07

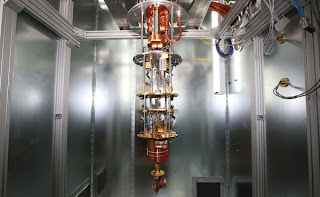

Today's New York Times has an article hailing the arrival of superfast practical quantum computers (weird thing pictured above), courtesy of Lockheed Martin, which purchased one from a company called D-Wave Systems. As the article notes,

... a powerful new type of computer that is about to be commercially deployed by a major American military contractor is taking computing into the strange, subatomic realm of quantum mechanics. In that infinitesimal neighborhood, common sense logic no longer seems to apply. A one can be a one, or it can be a one and a zero and everything in between — all at the same time. ... Lockheed Martin — which bought an early version of such a computer from the Canadian company D-Wave Systems two years ago — is confident enough in the technology to upgrade it to commercial scale, becoming the first company to use quantum computing as part of its business.

The article does mention that there are some skeptics. So beware.

Ten to fifteen years ago, I used to write frequently, mostly for New Scientist magazine, about research progress towards quantum computing. For anyone who hasn't read about this, quantum computing would exploit the peculiar properties of quantum physics to do computation in a totally new way. It could potentially solve some problems very quickly that computers running on classical physics, as today's computers do, would never be able to solve. Without getting into any detail, the essential thing about quantum processes is their ability to explore many paths in parallel, rather than just doing one specific thing, which would give a quantum computer unprecedented processing power. Here's an article giving some basic information about the idea.

I stopped writing about quantum computing because I got bored with it: not with the ideas, but with the achingly slow progress in bringing them into reality. To make a really useful quantum computer you need to harness quantum degrees of freedom, "qubits," in single ions, photons, the spins of atoms, etc., and have the ability to carry out controlled logic operations on them. You would need lots of them, say hundreds or more, to do really valuable calculations, but to date no one has managed to create and control more than about 2 or 3.

I wrote several articles a year noting major advances in quantum information storage, in error correction, in ways to transmit quantum information (which is more delicate than classical information) from one place to another, and so on. Every article at some point had a weasel phrase like "... this could be a major step towards practical quantum computing." They weren't. All of this was perfectly good, valuable physics work, but the practical computer receded into the future just as quickly as people made advances towards it.

That seems to be true today... except for one D-Wave Systems. Around five years ago, this company started claiming that it had achieved quantum computing and had built functioning devices with 128 qubits. It used superconducting technology. Everyone else in the field was aghast at the claim, given the sudden, staggering advance it implied over what anyone else in the world had achieved. Oh, and D-Wave didn't release sufficient information for the claim to be judged.

Here is the skeptical judgement of IEEE Spectrum magazine as of 2010. More up to date, and not quite so negative, is this assessment by quantum information expert Scott Aaronson from just over a year ago. The most important point he makes is about D-Wave's failure to demonstrate that its computer is really doing something essentially quantum, which is what would make it interesting. This would mean demonstrating so-called quantum entanglement in the machine, or carrying out some calculation so vastly superior to anything achievable by classical computers that one would have to infer quantum performance. Aaronson asks the obvious question:

... rather than constantly adding more qubits and issuing more hard-to-evaluate announcements, while leaving the scientific characterization of its devices in a state of limbo, why doesn’t D-Wave just focus all its efforts on demonstrating entanglement, or otherwise getting stronger evidence for a quantum role in the apparent speedup? When I put this question to Mohammad Amin, he said that, if D-Wave had followed my suggestion, it would have published some interesting research papers and then gone out of business—since the fundraising pressure is always for more qubits and more dramatic announcements, not for clearer understanding of its systems. So, let me try to get a message out to the pointy-haired bosses of the world: a single qubit that you understand is better than a thousand qubits that you don’t. There’s a reason why academic quantum computing groups focus on pushing down decoherence and demonstrating entanglement in 2, 3, or 4 qubits: because that way, at least you know that the qubits are qubits! Once you’ve shown that the foundation is solid, then you try to scale up.

So there's a finance and publicity angle here as well as the science. The NYT article doesn't really get into any of the specific claims of D-Wave, but I recommend Aaronson's comments as a good counterpoint to the hype.