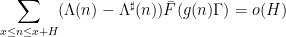

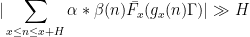

Kaisa Matomäki, Maksym Radziwill, Fernando Xuancheng Shao, Joni Teräväinen, and myself have (finally) uploaded to the arXiv our paper “Higher uniformity of arithmetic functions in short intervals II. Almost all intervals“. This is a sequel to our previous paper from 2022. In that paper, discorrelation estimates such as

were established, where

is the von Mangoldt function,

was some suitable approximant to that function,

was a nilsequence, and

![{[x,x+H]}](https://s0.wp.com/latex.php?latex=%7B%5Bx%2Cx%2BH%5D%7D&bg=ffffff&fg=000000&s=0&c=20201002)

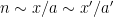

was a reasonably short interval in the sense that

for some

and some small

. In that paper, we were able to obtain non-trivial estimates for

as small as

, and for some other functions such as divisor functions

for small values of

, we could lower

somewhat to values such as

,

,

of

. This had a number of analytic number theory consequences, for instance in obtaining asymptotics for additive patterns in primes in such intervals. However, there were multiple obstructions to lowering

much further. Even for the model problem when

, that is to say the study of primes in short intervals, until recently the best value of

available was

, although this was very recently improved to

by Guth and Maynard

by Guth and Maynard.
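To get a feel for the model problem $F(g(n)\Gamma) = 1$, the following minimal Python sketch tabulates the normalized sum $\frac{1}{H} \sum_{x < n \leq x+H} (\Lambda(n) - 1)$ for a few exponents. It rests on several assumptions that are not taken from the paper: the approximant is replaced by the crude choice $\Lambda^\sharp \equiv 1$, the interval is taken half-open so that it has exactly $H$ terms, and the values `X = 100_000` and the exponents tried are arbitrary. Numerics at this scale say nothing about the asymptotic range of $\theta$; the point is only to make the quantity concrete.

```python
import math


def von_mangoldt_table(limit):
    """Return a list lam with lam[n] = Lambda(n) for 0 <= n <= limit."""
    lam = [0.0] * (limit + 1)
    is_prime = [True] * (limit + 1)
    for p in range(2, limit + 1):
        if is_prime[p]:
            for m in range(2 * p, limit + 1, p):
                is_prime[m] = False
            # Lambda(p^k) = log p for every prime power p^k <= limit.
            q = p
            while q <= limit:
                lam[q] = math.log(p)
                q *= p
    return lam


def short_interval_discrepancy(lam, x, H):
    """Return (1/H) * sum_{x < n <= x+H} (Lambda(n) - 1).

    The constant 1 is a crude stand-in (an assumption of this sketch)
    for the approximant Lambda^sharp.
    """
    return sum(lam[n] - 1.0 for n in range(x + 1, x + H + 1)) / H


X = 100_000  # arbitrary illustrative scale
lam = von_mangoldt_table(2 * X)
for theta in (0.9, 0.7, 0.5):
    H = int(X ** theta)
    print(theta, H, short_interval_discrepancy(lam, X, H))
```

A small absolute value in the last column corresponds to the interval $(X, X+H]$ containing roughly the expected number of primes (weighted by $\log p$).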

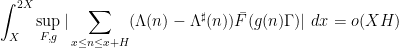

However, the situation is better when one is willing to consider estimates that are valid for almost all intervals, rather than all intervals, so that one now studies local higher order uniformity estimates of the form

where

and the supremum is over all nilsequences of a certain Lipschitz constant on a fixed nilmanifold

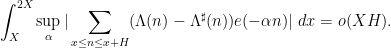

. This generalizes local Fourier uniformity estimates of the form

There is particular interest in such estimates in the case of the Möbius function

(where, as per the Möbius pseudorandomness conjecture, the approximant

should be taken to be zero, at least in the absence of a Siegel zero). This is because if one could get estimates of this form for any

that grows sufficiently slowly in

(in particular

), this would imply the (logarithmically averaged) Chowla conjecture, as I

showed in a previous paper.
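As a rough numerical illustration of the local Fourier uniformity quantity in the Möbius case (with $\mu^\sharp = 0$), here is a minimal Python sketch. Everything specific in it is an assumption made for illustration: the integral over $x$ is replaced by an average over a sparse set of starting points, the supremum over $\alpha$ is replaced by a maximum over a finite grid, intervals are taken half-open, and the parameters `X`, `H`, `num_alpha` are arbitrary. It is not how such estimates are proved.

```python
import cmath


def mobius_table(limit):
    """Return a list mu with mu[n] = Mobius function of n for 1 <= n <= limit."""
    mu = [1] * (limit + 1)
    mu[0] = 0
    is_prime = [True] * (limit + 1)
    for p in range(2, limit + 1):
        if is_prime[p]:
            for m in range(p, limit + 1, p):
                if m > p:
                    is_prime[m] = False
                mu[m] = -mu[m]  # one sign flip per distinct prime factor
            for m in range(p * p, limit + 1, p * p):
                mu[m] = 0  # not squarefree
    return mu


def local_fourier_average(mu, X, H, num_alpha=64, step=None):
    """Average over sampled x in [X, 2X) of
    max_alpha |sum_{x < n <= x+H} mu(n) e(-alpha n)| / H,
    with alpha restricted to the grid k / num_alpha (an assumption)."""
    step = step or H
    total, count = 0.0, 0
    for x in range(X, 2 * X, step):
        block = mu[x + 1 : x + H + 1]
        best = 0.0
        for k in range(num_alpha):
            alpha = k / num_alpha
            s = sum(
                v * cmath.exp(-2j * cmath.pi * alpha * (x + 1 + i))
                for i, v in enumerate(block)
            )
            best = max(best, abs(s))
        total += best / H
        count += 1
    return total / count


X, H = 2000, 40  # arbitrary illustrative parameters
mu = mobius_table(2 * X + H)
avg = local_fourier_average(mu, X, H)
print(avg)
```

The returned value always lies between 0 and 1; a local Fourier uniformity estimate asserts that the corresponding normalized quantity tends to zero as $X \to \infty$ in the relevant range of $H$. For the tiny $H$ used here one should not expect a particularly small number.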

While one can lower $\theta$ somewhat, there are still barriers. For instance, in the model case $F \equiv 1$, that is to say prime number theorems in almost all short intervals, until very recently the best value of $\theta$ was $1/6$, recently lowered to $2/15$ by Guth and Maynard (and can be lowered all the way to zero on the Density Hypothesis). Nevertheless, we are able to get some improvements at higher orders:

- For the von Mangoldt function, we can get $\theta$ as low as $1/3$, with an arbitrary logarithmic saving $\log^{-A} X$ in the error terms; for divisor functions, one can even get power savings in this regime.

- For the Möbius function, we can get $\theta = 0$, recovering our previous result with Tamar Ziegler, but now with $\log^{-A} X$ type savings in the exceptional set (though not in the pointwise bound outside of the set).

- We can now also get comparable results for the divisor function.

As sample applications, we can obtain Hardy-Littlewood conjecture asymptotics for arithmetic progressions of almost all given steps $h \sim X^{1/3+\varepsilon}$, and divisor correlation estimates on arithmetic progressions for almost all $h \sim X^{\varepsilon}$.

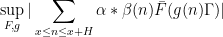

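Our proofs are rather long, but broadly follow the “contagion” strategy of Walsh, generalized from the Fourier setting to the higher order setting. Firstly, by standard Heath–Brown type decompositions, and previous results, it suffices to control “Type II” discorrelations such as

$$\sup_{F,g} \left| \sum_{x \leq n \leq x+H} \alpha * \beta(n) \overline{F}(g(n)\Gamma) \right|$$

for almost all $x$, and some suitable functions $\alpha, \beta$ supported on medium scales. So the bad case is when for most $x$, one has a discorrelation

$$\left| \sum_{x \leq n \leq x+H} \alpha * \beta(n) \overline{F_x}(g_x(n)\Gamma) \right| \gg H$$

for some nilsequence $F_x(g_x(n)\Gamma)$ that depends on $x$.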

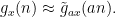

The main issue is the dependency of the polynomial $g_x$ on $x$. By using a “nilsequence large sieve” introduced in our previous paper, and removing degenerate cases, we can show a functional relationship amongst the $g_x$ that is very roughly of the form

$$g_x(an) \approx g_{x'}(a'n)$$

whenever $n \sim x/a \sim x'/a'$ (and I am being extremely vague as to what the relation “$\approx$” means here). By a higher order (and quantitatively stronger) version of Walsh’s contagion analysis (which is ultimately to do with separation properties of Farey sequences), we can show that this implies that these polynomials $g_x(n)$ (which exert influence over intervals $[x,x+H]$) can “infect” longer intervals $[x', x'+Ha]$ with some new polynomials $\tilde g_{x'}(n)$ and various $x' \sim Xa$, which are related to many of the previous polynomials by a relationship that looks very roughly like

$$g_x(n) \approx \tilde g_{ax}(an).$$

This can be viewed as a rather complicated generalization of the following vaguely “cohomological”-looking observation: if one has some real numbers $\alpha_i$ and some primes $p_i$ with $p_j \alpha_i \approx p_i \alpha_j$ for all $i,j$, then one should have $\alpha_i \approx p_i \alpha$ for some $\alpha$, where I am being vague here about what $\approx$ means (and why it might be useful to have primes). By iterating this sort of contagion relationship, one can eventually get the $g_x(n)$ to behave like an Archimedean character $n^{iT}$ for some $T$ that is not too large (polynomial size in $X$), and then one can use relatively standard (but technically a bit lengthy) “major arc” techniques based on various integral estimates for zeta and $L$-functions to conclude.
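The “cohomological” observation can be made completely concrete in one toy setting, which is an assumption of this sketch and not the meaning used in the paper: interpret “$\approx$” as exact equality modulo $1$, and take just two distinct primes $p_1, p_2$. If $p_2 \alpha_1 \equiv p_1 \alpha_2 \pmod 1$, pick integers $s, t$ with $s p_1 + t p_2 = 1$ (Bézout) and set $\alpha = s \alpha_1 + t \alpha_2$; then $p_1 \alpha \equiv s p_1 \alpha_1 + t p_2 \alpha_1 = \alpha_1$ and similarly $p_2 \alpha \equiv \alpha_2$. The function names below are hypothetical.

```python
from fractions import Fraction


def ext_gcd(a, b):
    """Return (g, s, t) with s*a + t*b == g == gcd(a, b)."""
    if b == 0:
        return a, 1, 0
    g, s, t = ext_gcd(b, a % b)
    return g, t, s - (a // b) * t


def frac(x):
    """Fractional part of a Fraction, i.e. its representative in [0, 1)."""
    return x - (x.numerator // x.denominator)


def common_frequency(p1, a1, p2, a2):
    """Given p2*a1 == p1*a2 (mod 1) with gcd(p1, p2) == 1, return alpha
    in [0, 1) with p1*alpha == a1 and p2*alpha == a2 (mod 1)."""
    assert frac(p2 * a1 - p1 * a2) == 0, "compatibility relation fails"
    g, s, t = ext_gcd(p1, p2)
    assert g == 1, "p1 and p2 must be coprime"
    return frac(s * a1 + t * a2)


# Toy data generated from a hidden frequency 5/7 (arbitrary choice).
hidden = Fraction(5, 7)
p1, p2 = 3, 11
a1, a2 = frac(p1 * hidden), frac(p2 * hidden)

alpha = common_frequency(p1, a1, p2, a2)
print(alpha, frac(p1 * alpha) == a1, frac(p2 * alpha) == a2)
```

Coprimality is what makes this work, which hints at why primes are convenient: with $p_1 = p_2 = 2$, $\alpha_1 = 0$, $\alpha_2 = 1/2$ the compatibility relation $p_2 \alpha_1 \equiv p_1 \alpha_2 \pmod 1$ holds, yet no $\alpha$ satisfies both $2\alpha \equiv 0$ and $2\alpha \equiv 1/2 \pmod 1$.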
