Ben Green, Freddie Manners and I have just uploaded to the arXiv our preprint “Sumsets and entropy revisited“. This paper uses entropy methods to attack the Polynomial Freiman-Ruzsa (PFR) conjecture, which we study in the following two forms:

Conjecture 1 (Weak PFR over  ) Let

) Let  be a finite non-empty set whose doubling constant

be a finite non-empty set whose doubling constant ![{\sigma[A] := |A+A|/|A|}](https://s0.wp.com/latex.php?latex=%7B%5Csigma%5BA%5D+%3A%3D+%7CA%2BA%7C%2F%7CA%7C%7D&bg=ffffff&fg=000000&s=0&c=20201002) is at most

is at most  . Then there is a subset

. Then there is a subset  of

of  of density

of density  that has affine dimension

that has affine dimension  (i.e., it is contained in an affine space of dimension

(i.e., it is contained in an affine space of dimension  ).

).

Conjecture 2 (PFR over  ) Let

) Let  be a non-empty set whose doubling constant

be a non-empty set whose doubling constant ![{\sigma[A]}](https://s0.wp.com/latex.php?latex=%7B%5Csigma%5BA%5D%7D&bg=ffffff&fg=000000&s=0&c=20201002) is at most

is at most  . Then

. Then  can be covered by

can be covered by  cosets of a subspace of cardinality at most

cosets of a subspace of cardinality at most  .

.

Our main results are then as follows.

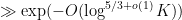

Theorem 3 If  with

with ![{\sigma[A] \leq K}](https://s0.wp.com/latex.php?latex=%7B%5Csigma%5BA%5D+%5Cleq+K%7D&bg=ffffff&fg=000000&s=0&c=20201002) , then

, then - (i) There is a subset

of

of  of density

of density  of “skew-dimension” (or “query complexity”)

of “skew-dimension” (or “query complexity”)  .

.

- (ii) There is a subset

of

of  of density

of density  of affine dimension

of affine dimension  (where

(where  goes to zero as

goes to zero as  ).

).

- (iii) If Conjecture 2 holds, then There is a subset

of

of  of density

of density  of affine dimension

of affine dimension  . In other words, Conjecture 2 implies Conjecture 1.

. In other words, Conjecture 2 implies Conjecture 1.

The skew-dimension of a set is a quantity smaller than the affine dimension which is defined recursively; the precise definition is given in the paper, but suffice to say that singleton sets have dimension  , and a set

, and a set  whose projection to

whose projection to  has skew-dimension at most

has skew-dimension at most  , and whose fibers in

, and whose fibers in  have skew-dimension at most

have skew-dimension at most  for any

for any  , will have skew-dimension at most

, will have skew-dimension at most  . (In fact, the skew-dimension is basically the largest quantity which obeys all of these properties.)

. (In fact, the skew-dimension is basically the largest quantity which obeys all of these properties.)

Part (i) of this theorem was implicitly proven by Pálvölgi and Zhelezov by a different method. Part (ii) with  replaced by

replaced by  was established by Manners. To our knowledge, part (iii) is completely new.

was established by Manners. To our knowledge, part (iii) is completely new.

Our proof strategy is to establish these combinatorial additive combinatorics results by using entropic additive combinatorics, in which we replace sets  with random variables

with random variables  , and cardinality with (the exponential of) Shannon entropy. This is in order to take advantage of some superior features of entropic additive combinatorics, most notably good behavior with respect to homomorphisms.

, and cardinality with (the exponential of) Shannon entropy. This is in order to take advantage of some superior features of entropic additive combinatorics, most notably good behavior with respect to homomorphisms.

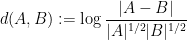

For instance, the analogue of the combinatorial doubling constant ![{\sigma[A] := |A+A|/|A|}](https://s0.wp.com/latex.php?latex=%7B%5Csigma%5BA%5D+%3A%3D+%7CA%2BA%7C%2F%7CA%7C%7D&bg=ffffff&fg=000000&s=0&c=20201002) of a finite non-empty subset

of a finite non-empty subset  of an abelian group

of an abelian group  , is the entropy doubling constant

, is the entropy doubling constant

![\displaystyle \sigma_{\mathrm{ent}}[X] := {\exp( \bf H}(X_1+X_2) - {\bf H}(X) )](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++%5Csigma_%7B%5Cmathrm%7Bent%7D%7D%5BX%5D+%3A%3D+%7B%5Cexp%28+%5Cbf+H%7D%28X_1%2BX_2%29+-+%7B%5Cbf+H%7D%28X%29+%29&bg=ffffff&fg=000000&s=0&c=20201002)

of a finitely-valued random variable

in

, where

are independent copies of

and

denotes

Shannon entropy. There is also an analogue of the Ruzsa distance

between two finite non-empty subsets

of

, namely the entropic Ruzsa distance

where

are independent copies of

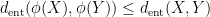

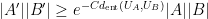

respectively. (Actually, one thing we show in our paper is that the independence hypothesis can be dropped, and this only affects the entropic Ruzsa distance by a factor of three at worst.) Many of the results about sumsets and Ruzsa distance have entropic analogues, but the entropic versions are slightly better behaved; for instance, we have a contraction property

whenever

is a homomorphism. In fact we have a refinement of this inequality in which the gap between these two quantities can be used to control the entropic distance between “fibers” of

(in which one conditions

and

to be fixed). On the other hand, there are direct connections between the combinatorial and entropic sumset quantities. For instance, if

is a random variable drawn uniformly from

, then

![\displaystyle \sigma_{\mathrm{ent}}[U_A] \leq \sigma[A].](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++%5Csigma_%7B%5Cmathrm%7Bent%7D%7D%5BU_A%5D+%5Cleq+%5Csigma%5BA%5D.&bg=ffffff&fg=000000&s=0&c=20201002)

Thus if

has small doubling, then

has small entropic doubling. In the converse direction, if

has small entropic doubling, then

is close (in entropic Ruzsa distance) to a uniform random variable

drawn from a set

of small doubling; a version of this statement was proven in an

old paper of myself, but we establish here a quantitatively efficient version, established by rewriting the entropic Ruzsa distance in terms of certain

Kullback-Liebler divergences.

Our first main result is a “99% inverse theorem” for entropic Ruzsa distance: if  is sufficiently small, then there exists a finite subgroup

is sufficiently small, then there exists a finite subgroup  of

of  such that

such that

This result uses the results just mentioned to relate

to a set

of small doubling, which can then be related to a subgroup

by standard inverse theorems; this gives a weak version of

(1) (roughly speaking losing a square root in the bound), and some additional analysis is needed to bootstrap this initial estimate back to

(1).

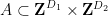

We now sketch how these tools are used to prove our main theorem. For (i), we reduce matters to establishing the following bilinear entropic analogue: given two non-empty finite subsets  of

of  , one can find subsets

, one can find subsets  ,

,  with

with

such that

have skew-dimension at most

, for some absolute constant

. This can be shown by an induction on

(say). One applies a non-trivial coordinate projection

to

. If

and

are very close in entropic Ruzsa distance, then the 99% inverse theorem shows that these random variables must each concentrate at a point (because

has no non-trivial finite subgroups), and can pass to a fiber of these points and use the induction hypothesis. If instead

and

are far apart, then by the behavior of entropy under projections one can show that the fibers of

and

under

are closer on average in entropic Ruzsa distance of

and

themselves, and one can again proceed using the induction hypothesis.

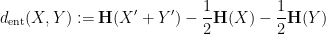

For parts (ii) and (iii), we first use an entropic version of an observation of Manners that sets of small doubling in  must be irregularly distributed modulo

must be irregularly distributed modulo  . A clean formulation of this in entropic language is the inequality

. A clean formulation of this in entropic language is the inequality

whenever

take values in a torsion-free abelian group such as

; this turns out to follow from two applications of the entropy submodularity inequality. One corollary of this (and the behavior of entropy under projections) is that

This is the key link between the

and

worlds that is used to prove (ii), (iii): while (iii) relies on the still unproven PFR conjecture over

, (ii) uses the unconditional progress on PFR by Konyagin, as detailed in

this survey of Sanders. The argument has a similar inductive structure to that used to establish (i) (and if one is willing to replace

by

then the argument is in fact relatively straightforward and does not need any deep partial results on the PFR).

As one byproduct of our analysis we also obtain an appealing entropic reformulation of Conjecture 2, namely that if  is an

is an  -valued random variable then there exists a subspace

-valued random variable then there exists a subspace  of

of  such that

such that

![\displaystyle d_{\mathrm{ent}}(X, U_H) \ll \sigma_{\mathrm{ent}}[X].](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++d_%7B%5Cmathrm%7Bent%7D%7D%28X%2C+U_H%29+%5Cll+%5Csigma_%7B%5Cmathrm%7Bent%7D%7D%5BX%5D.&bg=ffffff&fg=000000&s=0&c=20201002)

Right now the best result in this direction is

![\displaystyle d_{\mathrm{ent}}(X, U_H) \ll_\varepsilon \sigma_{\mathrm{ent}}[X] + \sigma_{\mathrm{ent}}^{3+\varepsilon}[X]](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++d_%7B%5Cmathrm%7Bent%7D%7D%28X%2C+U_H%29+%5Cll_%5Cvarepsilon+%5Csigma_%7B%5Cmathrm%7Bent%7D%7D%5BX%5D+%2B+%5Csigma_%7B%5Cmathrm%7Bent%7D%7D%5E%7B3%2B%5Cvarepsilon%7D%5BX%5D&bg=ffffff&fg=000000&s=0&c=20201002)

for any

, by using Konyagin’s partial result towards the PFR.

) Let

be a finite non-empty set whose doubling constant

is at most

. Then there is a subset

of

of density

that has affine dimension

(i.e., it is contained in an affine space of dimension

).

) Let

be a non-empty set whose doubling constant

is at most

. Then

can be covered by

cosets of a subspace of cardinality at most

.

with

, then

of

of density

of “skew-dimension” (or “query complexity”)

.

of

of density

of affine dimension

(where

goes to zero as

).

of

of density

of affine dimension

. In other words, Conjecture 2 implies Conjecture 1.

, and a set

whose projection to

has skew-dimension at most

, and whose fibers in

have skew-dimension at most

for any

, will have skew-dimension at most

. (In fact, the skew-dimension is basically the largest quantity which obeys all of these properties.)

replaced by

was established by Manners. To our knowledge, part (iii) is completely new.

with random variables

, and cardinality with (the exponential of) Shannon entropy. This is in order to take advantage of some superior features of entropic additive combinatorics, most notably good behavior with respect to homomorphisms.

of a finite non-empty subset

of an abelian group

, is the entropy doubling constant

is sufficiently small, then there exists a finite subgroup

of

such that

of

, one can find subsets

,

with

must be irregularly distributed modulo

. A clean formulation of this in entropic language is the inequality

is an

-valued random variable then there exists a subspace

of

such that